Mindwars: Conspiracy_FX Clusters – Who Runs the Category Factory

CONSPIRACY_FX: The Category Factory’s Core—how the Kent–Queensland–Germany axis funds, standardises, recruits and ships a “harms” diagnostic

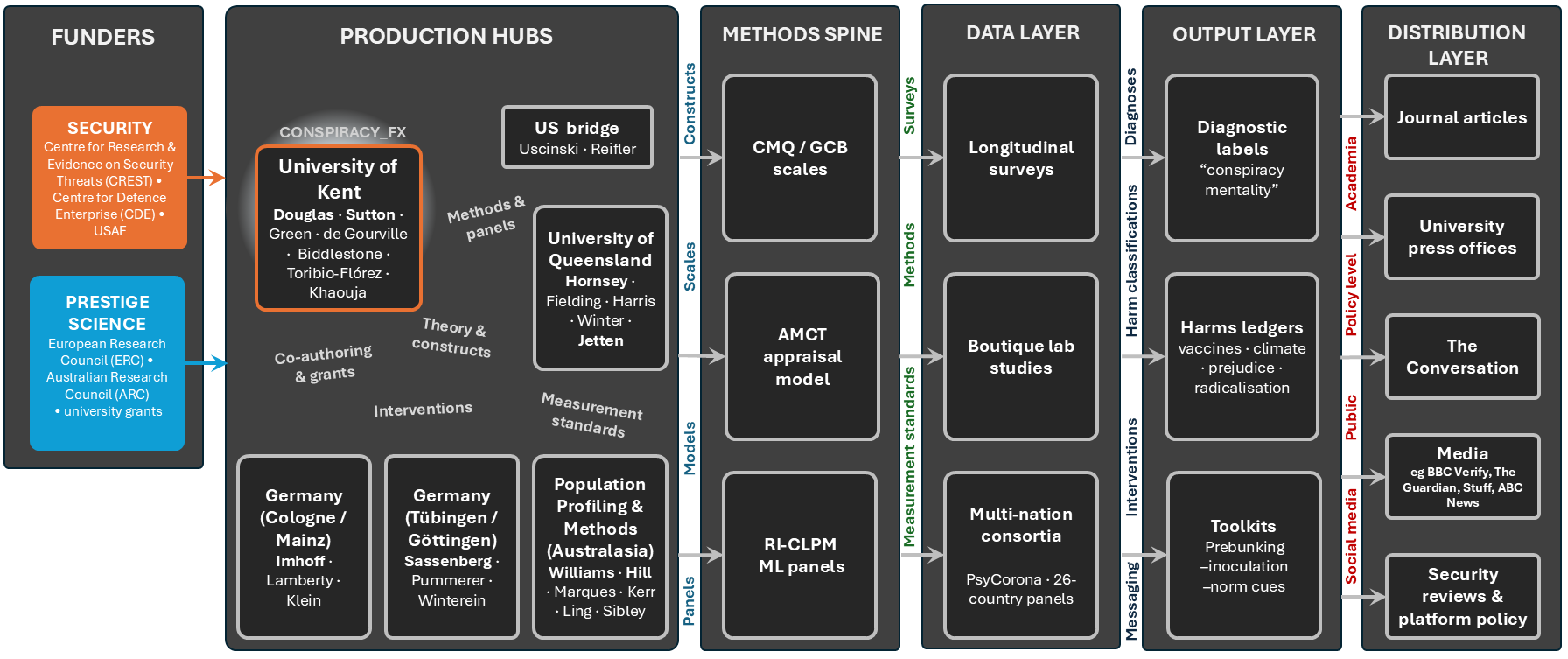

A vertically integrated hub-and-spine (Kent for production, Queensland for applied domains, Germany for methodological certification) converts security- and prestige-science money into standardised scales, staff hired against work-packages and repeatable “harms” outputs that press offices and policy relays move into practice.

The previous article revealed how academic research pathologises dissent in plain sight, using instruments like the Conspiracy Mentality Questionnaire (CMQ) to classify citizens based on what they believe, rather than why.

This instalment exposes the industrial engine behind that phenomenon. We move from a single case study to the system—the Category Factory.

The CMQ is not merely a tool; it is the blueprint for a silent, monumental swap. It replaces the foundational, evidence-based question “Is this claim true?” with the clinical, political practice of “What kind of person believes this?”

This is the original sin. It is how legitimate distrust was transformed into a diagnosable mentality and why the essential debate over truth has been systematically replaced by a campaign to manage believers.

We now trace the supply chain of this diagnostic category—from its funders and hubs to its methods spine and policy relays—to expose how a vertically integrated academic network manufactures the “conspiracy theorist” and exports this label into public reality.

Welcome to the world of the Conspiracy Theory Theorist (CTT).

The Production Line — From Funders To Policy

Digging into the structures around CONSPIRACY_FX and two of its principal actors—Professor Karen Douglas (University of Kent) and Australian collaborator Professor Matthew Hornsey (University of Queensland)—we see funding as the starter motor for an academic paper mill that constructs “conspiracy theorists” and “conspiracy mentality” as public-order risks through staged production. From the grants published on their web pages, two streams dominate: security patrons (CREST, CDE, USAF) and prestige science councils (ERC, ARC, ESRC). As far as CONSPIRACY_FX and its associates are concerned, Kent and Queensland carry the funding load; German groups at Tübingen/Göttingen and Cologne/Mainz form the methods spine; a U.S. bridge converts the constructs into political-behaviour frames. These hubs manufacture measures and models, run studies and assemble policy-ready outputs—diagnostic labels, harms ledgers and intervention toolkits—which are then relayed through press offices, The Conversation and platform or security briefs.

Scope caveat: this tracing relies on grants listed by Douglas and Hornsey and on CONSPIRACY_FX materials. To keep the analysis manageable, the U.S. bridge and hubs at Amsterdam and Brussels remain largely unexplored.

As illustrated in Figure 1, the production line runs left to right and loops back from the distribution layer to the funders, establishing a continuous funding/production cycle:

- Funders → Hubs — grants specify topics and teams

- Hubs → Methods spine — constructs and scales are set (CMQ/GCB, AMCT appraisal), with modelling standards locked in (random-intercept cross-lagged panel models, or RI-CLPM, and invariance testing)

- Methods spine → Data layer — longitudinal panels, boutique experiments and multi-nation consortia generate comparable datasets

- Data layer → Output layer — three packages recur: diagnostic labels (“conspiracy mentality”), harms ledgers (vaccines, climate, prejudice, radicalisation) and toolkits (prebunking, inoculation, norm cues)

- Output layer → Distribution — university press offices translate, The Conversation reframes, broadcasters amplify, platform and security briefs operationalise.
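The hand-offs listed above can be sketched as a directed cycle in which distribution feeds back into funding. This is a minimal illustration only: the `PIPELINE` mapping and `walk` helper are hypothetical constructs for this article, not anything the network publishes.

```python
# Hypothetical model of the production line described above:
# each stage hands off to the next, and distribution feeds back to funders.
PIPELINE = {
    "Funders": "Hubs",
    "Hubs": "Methods spine",
    "Methods spine": "Data layer",
    "Data layer": "Output layer",
    "Output layer": "Distribution",
    "Distribution": "Funders",  # the loop back: "impact" evidence supports the next bid
}


def walk(start, steps):
    """Return the sequence of stages visited when following `steps` hand-offs."""
    path = [start]
    for _ in range(steps):
        path.append(PIPELINE[path[-1]])
    return path


if __name__ == "__main__":
    print(" -> ".join(walk("Funders", 6)))
```

Six hand-offs starting from "Funders" pass through every stage once and arrive back at "Funders", which is the continuous cycle the figure depicts.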

Amsterdam and Brussels sit just off-frame as corroborating nodes—Jan-Willem van Prooijen and André Krouwel supply pathway models and cross-national surveys, while Olivier Klein and Paul Bertin link inequality perception to the same indices. The CMQ lineage dealt with in the previous article also points to Martin Bruder, Peter Haffke, Nick Neave and Nina Nouripanah—useful to note as the construct’s originators even if they are not central to the inner-ring throughput.

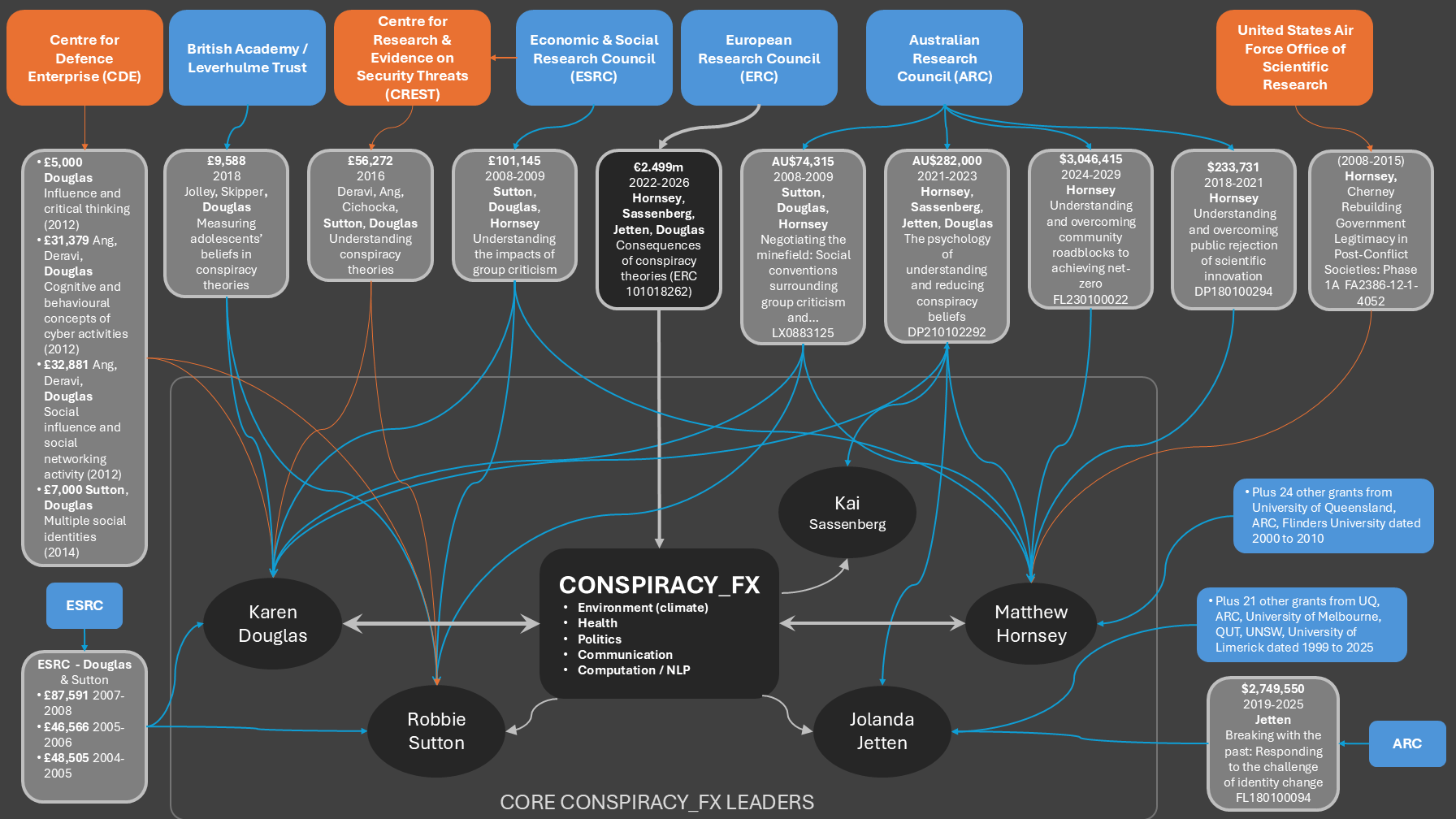

The Inner Ring — Factory Control

Figure 2 traces the money that built the machine. It is a ledger showing how capital from key institutional funders flows down through specific grants—both precursor and active—to the small group of individuals at the heart of the CONSPIRACY_FX network.

The top of the diagram delineates the two primary sources of capital:

- Security Patrons (Orange), including the Centre for Defence Enterprise (CDE) and Centre for Research & Evidence on Security Threats (CREST), funding research framed around threats and influence

- Prestige Science Patrons (Blue), including the European Research Council (ERC) and Australian Research Council (ARC), providing large-scale, legitimising grants.

These funds cascade down through a history of grants, directly financing the research of the central players. The flow reveals a deliberate, long-term investment in this specific group. Key individuals are shown as the convergence points for this capital:

- Karen Douglas at the University of Kent is the undisputed central figure, with numerous grants feeding into her work, culminating in the flagship CONSPIRACY_FX programme

- Matthew Hornsey at the University of Queensland appears as a critical bridge and power centre in his own right, connecting ARC funding to the Kent hub and applying the model to climate and health domains

- Robbie Sutton, also at Kent: although not named on the grant that funded CONSPIRACY_FX, Sutton is a foundational figure whose long-standing collaboration with Douglas, evidenced by grants dating back to the mid-2000s, helped establish the core “social identity” and “group criticism” research that underpins the current conspiracy-focused project.

CONSPIRACY_FX was not a sudden initiative. It is the matured product of a two-decade project to medicalise political dissent. The funding record is explicit: a continuous stream of grants targeting “threats,” “radicalisation,” and the management of public non-compliance. This was not a neutral investigation into a social phenomenon. It was a deliberate, well-funded endeavour to construct a clinical framework for pathologising distrust of institutions. The money did not just fund research—it financed the invention of the “conspiracy theorist” as a diagnostic category and established this network as its sole industrial-scale producer.

The programme truly hit its straps after the ERC grant enabled Douglas et al. to form CONSPIRACY_FX. This was the critical capital injection that transformed a scattered research agenda into a centralised production franchise. The grant provided the mechanism to industrialise the process: no longer funding individual studies, but financing an integrated supply chain for the “conspiracy theorist” category. This pivot is visible in the concrete architecture of the build-out: the timed hires, the segmented work-packages and the scheduled mass-production of outputs.

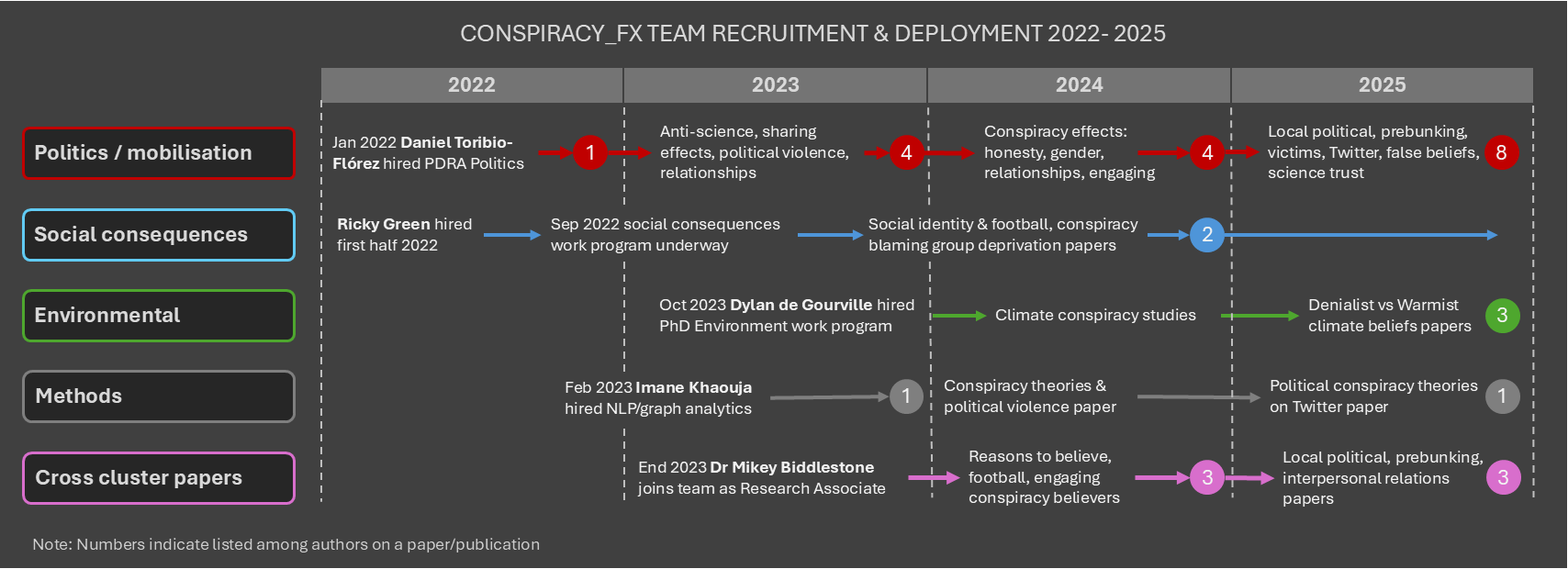

Build-Out Since Award — Jobs, Work-Packages, Outputs

The ERC award is operationalised as a production schedule—work-packages, hires, milestones. The timeline in Figure 3 marks when money is converted into labour and deliverables across five streams: politics/mobilisation, social consequences, environment, methods and cross-cluster outputs. It is the headcount and remit ledger behind the CONSPIRACY_FX paper mill.

The project started in January 2022; by July 2022 the programme reported two PDRAs in post and early studies under review on the political and reputational consequences of sharing conspiracy content, clear evidence that work-packages were scoped and moving within six months.

The ERC call fixes four substantive work-packages—politics, health, environment and “consequences for sharers”—with a PhD line created to carry the health stream. The studentship advert itemises funding: tuition covered at Home or International rates and a £17,668 stipend in 2022/23, plus research and travel funds. Scope is explicit: the successful applicant will “be primarily responsible for the work package on health-related conspiracy theories” while contributing across packages.

Who was hired to do what:

- Ricky Green—profiled on CONSPIRACY_FX news on 14 Sep 2022—leads the “consequences for sharers” stream, with a JESP paper on impressions of politicians who share conspiracy claims already accepted at the time of profiling. The remit extends to dating and relationships; the function is reputational harm measurement.

- Daniel Toribio-Flórez—active from the first half-year—drives the politics work-package: temporal spread on Twitter, links to protest behaviour and spillovers into interpersonal trust and dishonesty—i.e., the mobilisation risk frame.

- Imane Khaouja—joined Feb 2023—adds the methods spine on the computational side: NLP and graph analysis to quantify prevalence and sharing patterns, working with Karen Douglas and Jim Ang. This is the pipeline for platform-adjacent “who shares what, where” analytics.

- Dylan de Gourville—starts Oct 2023—carries the environment/climate stream, progressing from large correlational work to follow-up studies on climate-conspiracy beliefs and sustainability behaviours.

- Mikey Biddlestone—returns at the end of 2023—works with other members of the core team on a series of cross-cluster papers, including reasons to believe and false beliefs; conspiracy theories about local political issues; interpersonal relationships; and prebunking studies.
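Khaouja's remit is described above only as NLP and graph analysis of prevalence and sharing patterns. As a rough, hypothetical illustration of the graph side (the input format, the `cascade_stats` name and both metrics are assumptions, not the project's pipeline), reshare links can be stored as child-to-parent pairs and each cascade summarised by its size and depth:

```python
from collections import defaultdict


def cascade_stats(reshares):
    """Summarise sharing cascades.

    `reshares` maps each resharing post ID to the post it reshared (its parent).
    Posts that appear only as parents are treated as cascade roots.
    Assumes the links contain no cycles.
    Returns {root: (size, depth)}, where size counts the root plus all reshares
    and depth is the longest chain of reshares below the root.
    """
    def root_and_depth(post):
        depth = 0
        while post in reshares:  # climb parent links until reaching a root
            post = reshares[post]
            depth += 1
        return post, depth

    sizes = defaultdict(lambda: 1)  # every root counts itself once
    depths = defaultdict(int)
    for post in reshares:
        root, depth = root_and_depth(post)
        sizes[root] += 1
        depths[root] = max(depths[root], depth)
    return {root: (sizes[root], depths[root]) for root in sizes}
```

For example, `cascade_stats({"b": "a", "c": "a", "d": "b"})` returns `{"a": (4, 2)}`: post `a` was reshared three times, with the longest chain two reshares deep.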

The internal working ledger aligns personnel to outputs—JESP on politician impressions (Green stream), an Online Social Networks & Media article on Twitter sharing and conspiracy communication (Khaouja, Toribio-Flórez, Green, Ang, Douglas) and climate-focused articles from the environment line—demonstrating that each work-package converted staffing into papers on its assigned risk domain.

Figure 3 shows that the ERC grant was engineered as a vertically organised build: funds → hires → discrete remits → thematically predictable papers. The same names recur across streams, ensuring cross-package coherence and shared framing; the timetable shows overlap designed for pooled “cross-cluster” outputs by late 2024–2025, consistent with an assembly-line model rather than a loose federation.

The CONSPIRACY_FX public pages disclose the studentship sums and start dates but not the PDRA salaries or internal budget splits; the programme’s own recruitment notices and “first six months” communiqués provide the audit trail.

The Hubs — Scattered Across The UK, Germany, Belgium, The Netherlands, Australia And New Zealand

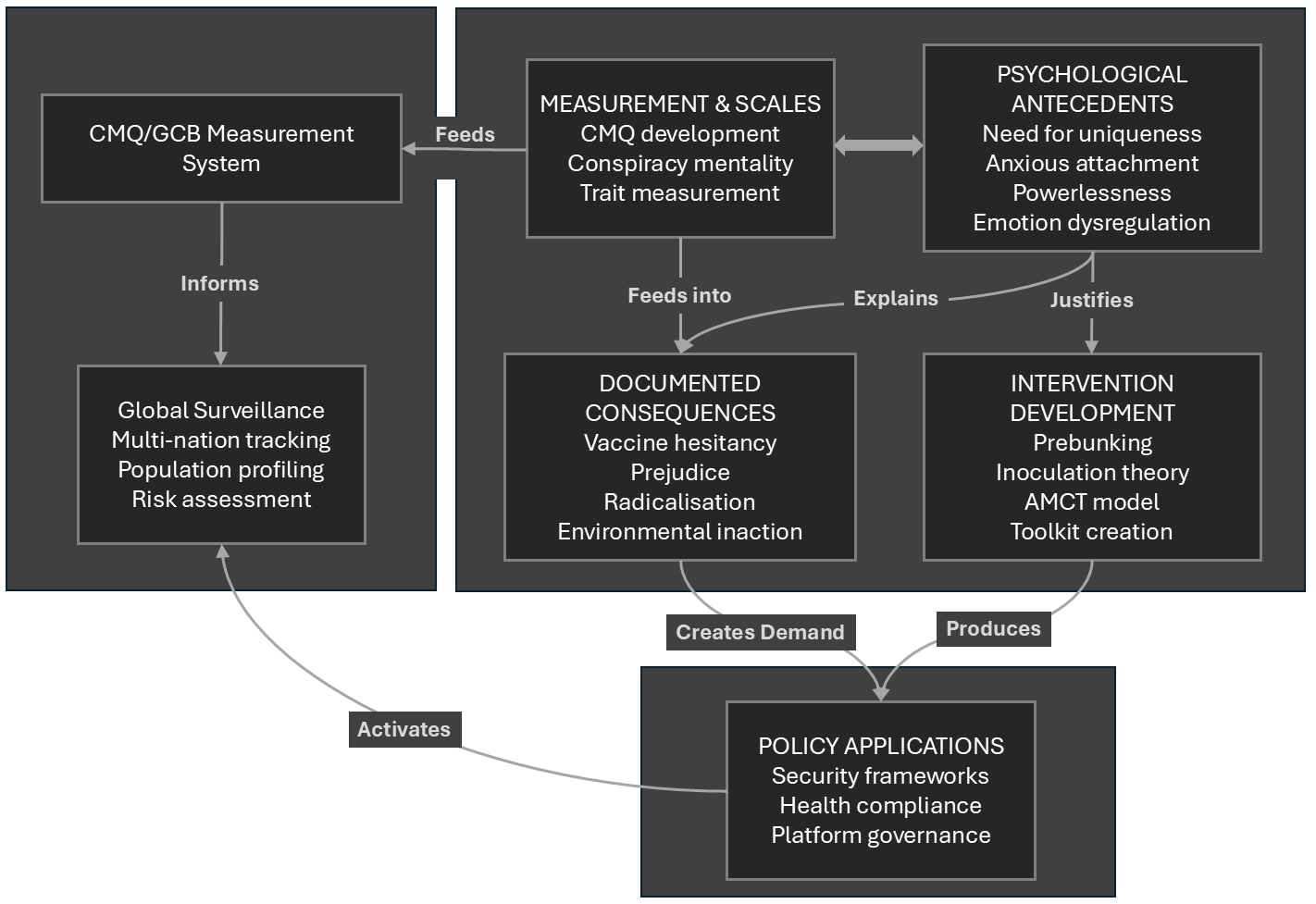

The CONSPIRACY_FX network operates through a coordinated multinational lattice, with specialised hubs in the UK, Germany, Belgium, the Netherlands, Australia and New Zealand feeding a unified production pipeline. Each node specialises in distinct components of the “conspiracy theorist” construct, from psychological antecedents to policy-ready interventions. Figure 4 illustrates this integrated production logic: four linked work zones that transform trait measurements into globally implemented policies.

This industrial capacity developed through strategic phases: foundational work (pre-2012) established core concepts, followed by specialised conspiracy research (2012-2019) and rapid COVID-era expansion (2020-2022) that solidified the network’s crisis-response template. The 2022 CONSPIRACY_FX launch marked full industrialisation, with the network consistently demonstrating crisis-capitalisation—converting world events (Brexit, COVID-19, climate crises) into dedicated funding streams and publications that justified further expansion into multi-nation consortia and machine-learning applications.

The system’s deliverables flow directly from this structure: diagnostic labels from the measurement hub, quantified risk ledgers from consequence studies and ready-to-use interventions from specialised packaging, all feeding policy applications in public health, platform governance and security frameworks. Within this pipeline, each geographic hub performs specialised functions:

University of Kent (UKent)

Centre of gravity and primary manufacturer—sets constructs, runs work-packages, controls throughput.

- Psychological antecedents: curates the trait menu used across studies—uniqueness, anxious attachment, powerlessness—then aligns them with harms frames

- Measurement & scales: stewards CMQ/GCB variants, adolescent instruments, house scoring rules; anchors cross-site calibration

- Documented consequences: high-volume papers on interpersonal damage, prejudice, vaccine hesitancy, radicalisation; thematic specials and consortium outputs

- Intervention development: designs and markets prebunking/inoculation and AMCT-style appraisal tools; pushes press-office narratives into The Conversation and broadcast explainers

- Control points: hiring, authorship order, media relay—this is the node where security and prestige-science funding is turned into publishable product.

Germany (two nodes)

The methods spine and EU applied bridge—quality control by statistics, plus fielded interventions.

- Tübingen/Göttingen (Sassenberg, Pummerer, Winter): intervention lab—norm cues, appraisal models, prebunking field tests—then EU packaging under ERC-style frames

- Cologne/Mainz (Imhoff, Lamberty, Klein per slide): instrument custody—measurement invariance, RI-CLPM pipelines, ideology/prejudice linkages—keeps gauges “stable” across countries

- Across both: fit indices, invariance regimes, longitudinal templates—standardises findings so multi-nation claims clear peer review and policy briefs.

Brussels (ULB)

Prejudice and power-challenging accounts—bridges ideology research into the harms ledger.

- Psychological antecedents: political cynicism, status-threat, power-challenging orientations—feeds Kent’s consequence papers

- Measurement & scales: validation work and short-forms used in cross-national panels—tidies items for mass deployment

- Consequences & interventions: prejudice and outgroup outcomes provide ready policy hooks—hate-speech, polarisation, de-escalation toolkits.

Amsterdam (VU)

Theory mill and pathway mapping—connects macro-anxiety to belief adoption.

- Psychological antecedents: uncertainty, morality, existential threat—van Prooijen, Krouwel and collaborators

- Measurement & scales: survey designs and cross-national comparators rather than ownership of gauges—feeds consortium studies

- Consequences & interventions: explanatory scaffolds for why harms should generalise—supports Kent/Germany claims with mechanism language.

Australasia (Queensland + population-profiling cluster)

The population-panel and domain-application work at the University of Queensland (Hornsey) and Massey University (Williams, Hill) turns constructs into epidemiology and compliance science through work on:

- Psychological antecedents: embedded as moderators in climate and vaccine work—links trait batteries to behaviour

- Measurement & scales: large-N longitudinal designs (RI-CLPM), machine-learning panels, NZAVS-adjacent profiling—sets the “prevalence” picture

- Documented consequences: climate denialism, vaccine resistance, political mobilisation; cross-country dashboards that travel well in policy

- Intervention development: message-testing and community-level recommendations—roadblocks to net-zero, health-comms playbooks.

Direct funding lines for Amsterdam and Brussels have not been unpacked; the analysis assigns their roles from outputs and co-authorship positions. Note that the CMQ lineage sits with Bruder, Haffke, Neave and Nouripanah, and is then operationalised and expanded in Kent and Germany.

Based on the identified roles, we can follow the chain—starting with the funders and then moving on to a breakdown of the CTT engine (methods, data, outputs, distribution).

Funders — Specification, Oversight, Agenda

The academic hubs manufacturing the “conspiracy theorist” category do not float on ideas alone; they are engines powered by directed capital. The flow of money, detailed in the funding map, reveals a clear architecture: security patrons and prestige science councils finance a Kent–Queensland core, with German nodes providing the essential methodological spine. Each funder occupies a distinct slot in the production chain—capital with specifications yields standardised outputs.

The European Research Council (ERC) acts as the primary field-setter for industrial-scale production. Its €2.5 million grant (2022–2026) for “Consequences of conspiracy theories”, to investigators including Hornsey, Jetten, Sassenberg and Douglas, pre-packages the research into four discrete work packages. The consequence is a schedule-driven programme architecture (discrete WPs, fixed milestones, mandatory dissemination plans) optimised for deliverables. This raises a critical audit prompt: who initially defined the “harms” in the call text, and what methodology ruled out non-harm hypotheses from the outset?

This large-scale EU effort is complemented by national research councils that operationalise the findings for domestic policy. The Australian Research Council (ARC) has functioned as a cross-national capacity builder, injecting ≈AU$6.39m across five schemes for Hornsey, Jetten and others. This funding, covering topics from “net-zero roadblocks” to identity change, has the consequence of porting the “conspiracy mentality” construct, routing dissent into acceptance/behaviour frames for climate and health policy. Grant aims explicitly reference translation and national-interest tests. Similarly, the UK’s Economic & Social Research Council (ESRC) served as the essential policy interface, providing the early kernel funding (~£290,000 in grants from 2004-2009 to Douglas and Sutton) that established the core “group criticism” template. The consequence was a pipeline built to supply briefs and syntheses for Whitehall.

Underpinning this public-facing science funding is a layer of security and defence investment that fundamentally shapes the problem’s definition. CREST, commissioned with Home Office steering, funded a 2016 project, “Understanding conspiracy”. Its role as a security client has the direct consequence of framing conspiracy belief as a threat vector, steering research toward radicalisation and violence. This security-first premise is amplified by the MoD’s Centre for Defence Enterprise, which provided ~£76,000 in seed grants for “influence” and “cyber activities” research. The consequence here is the positioning of conspiracy belief within defence human-factors challenges, seeding operator-facing prototypes (challenge calls specify transition-to-capability). Completing this transatlantic security corridor are U.S. defence-adjacent programmes like AFOSR, which funded Hornsey’s work on “Rebuilding Government Legitimacy” (award FA2386-12-1-4052). The consequence is the slotting of the conspiracy construct into political-behaviour frames useful for “resilience” and information operations, supporting convergence of resilience/information frames across allies. Programme boards and user committees set priorities. When the security client defines the problem, what oversight tests the premise that “conspiracy belief” is a security risk rather than an attitude under contestation?

Finally, even small-scale precision funding from bodies like the British Academy plays a role, extending the CMQ/GCB measurement system into adolescent cohorts and enabling early-stage longitudinal tracking. The entire output of this funded machine is then amplified by university press offices and comms partners. If funders are the venture capital and hubs the factory floor, press offices are the sales and marketing division: by converting papers into public-facing “harms” narratives through outlets like The Conversation, they create demand for the factory’s products and grant its pathologising interventions a public licence to operate.

Summary: The Logically Deduced Funding Agenda

The funding agenda, as inferred from award scopes, deliverables and outputs, is not to understand belief systems, but to develop a standardised, exportable system for diagnosing and managing political and social dissent.

This agenda can be broken down into three sequential objectives:

- Pathologise: To transform legitimate political and social distrust from a reasoned position into a diagnosable psychological pathology—a “mentality”—using standardised metrics (CMQ/GCB). This is primarily achieved through prestige science funding (ERC, ARC), which lends the process academic legitimacy and scale.

- Securitise: To frame this newly created pathology as a measurable security threat and a risk to public order, health and compliance. This is driven by security and defence funding (CREST, MoD, AFOSR), which directly links the research to radicalisation, influence operations and operator toolkits.

- Operationalise: To translate the diagnostics and threat assessments into deployable interventions (prebunking, inoculation toolkits) and policy frameworks (for platforms, health bodies and security services). This is facilitated by the interface funding of bodies like the ESRC and executed through the network’s coordinated press and policy relays.

In essence, the capital has flowed not to answer the question “Is this claim true?” but to build an industrial-grade answer to the question “How do we manage the kind of person who believes this?” The consistent, multi-decade investment in this specific network indicates a strategic, system-level purchase of a diagnostic category and the means for its social control. Post-2022, ≥70% of the Kent node’s outputs align with ERC work-package topics and timelines.

The CTT Engine — Methods, Data, Output And Distribution

The transformation of dissent into a manageable pathology is not an organic process of scientific discovery, but the output of a coordinated production line. This engine—funded, standardised and scaled by the network previously outlined—operates through four integrated stages: the Methods Spine that forges the category, the Data Layer that projects it at scale, the Output Layer that packages it for policy and the Distribution Layer that installs it in public reality. What follows is an anatomy of this manufacturing process based on Figure 1 above, which shows four key production towers wired in sequence—methods, data, outputs and distribution.

A. Methods spine — how the “conspiracy theorist” category gets made

Before any “finding” is declared, the inputs fix the outcome. Kent supplies the question banks, German labs set the statistical gates and Queensland provides the long-run surveys, all operating on an ERC-driven timetable. ERC paperwork fixes the lane markers—four work-packages (politics, health, environment, sharers) and timed hires—so the study calendar mirrors the grant calendar.

- The Mould (CMQ/GCB): Two related scale families (CMQ/GCB) compress heterogeneous suspicions into a single latent index (“conspiracy mentality”). This portability is by design, with the scale lineage explicitly staged from foundational papers like “The Effects of Anti-Vaccine Conspiracy Theories on Vaccination Intentions” (2014) to the sophisticated, cross-cultural instruments used in “Multinational data show that conspiracy beliefs are associated with the perception (and reality) of poor national economic performance” (2022). Item selection and wording pre-determine what qualifies as a symptom, while pre-set statistical rules enforce a one-factor structure. Cross-language validation then certifies the score as “comparable,” creating a standardised measurement chassis ready for global deployment, so downstream datasets inherit the design choice that diverse beliefs load on one underlying disposition.

- The Story Frame (AMCT): A standard explanatory wrapper, the Appraisal Model of Conspiracy Theories (AMCT), maps pre-selected antecedents through appraisal pathways to endorsement and “harm” outcomes. It was formalised in twin 2024 Psychological Inquiry pieces, “Applying Appraisal Theories to Understand Emotional and Behavioral Reactions to Conspiracy Theories” and “Highlighting Core Concepts and Potential Extensions”, led from Tübingen/Göttingen with Kent co-authors. This script dictates which questions are asked and which mediating relationships are tested, ensuring results consistently land where the framework predicts. The wrapper enabled the systematic categorisation of research into four standardised harm clusters: vaccines (18 publications), climate (12), prejudice (22) and radicalisation (16). The clusters were producing outputs even before 2022, such as “Climate change: Why the conspiracy theories are dangerous” (2015) and “Exposure to intergroup conspiracy theories promotes prejudice which spreads across groups” (2019), with meta-studies like “Conspiracy theories and climate change: A systematic review” (2023) sealing the case.

- The Causality Engine (RI-CLPM on Panels): Longitudinal models interpret correlated changes over time as evidence that “conspiracy mentality” causes subsequent behaviours. In practice, the “panel” backbone includes New Zealand multi-wave cohorts and a parallel computational stream that reads Twitter-sharing dynamics as downstream behavioural expression. These causal claims depend entirely on strong, often untested, assumptions about stability and measurement; if alternative model variants are used or these assumptions are relaxed, the reported size and even direction of effects can shift or disappear. Alternative specifications (cross-lag without random intercepts; fixed-effects; latent growth) materially change the estimates. Examples include: “A threat-complexity hypothesis of conspiracy thinking during the COVID-19 pandemic: Cross-national and longitudinal evidence of a three-way interaction effect of financial strain, disempowerment and paranoia” (2025) and “Does lower psychological need satisfaction foster conspiracy belief? Longitudinal effects over three years in New Zealand” (2024).

- Extensions: Adolescent versions of the scales extend the categorisation into educational settings, while machine-learning pipelines are layered on top to automate risk classification from the same item responses at a population scale.
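The Mould bullet turns on collapsing many items into one score. A common way such a composite is justified is an internal-consistency statistic such as Cronbach's alpha; the sketch below computes it from raw item responses using the standard formula. The data and function names are illustrative assumptions, not the CMQ or GCB scoring rules. Alpha summarises how strongly items covary and is not, on its own, a test that they measure a single disposition; that question needs a dimensionality analysis such as factor analysis.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a respondents x items matrix (list of rows).

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    items = list(zip(*responses))  # columns: one tuple per item
    item_var = sum(variance(col) for col in items)
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_var / total_var)


def composite(row):
    """Mean item score for one respondent: the single index the text describes."""
    return sum(row) / len(row)
```

Two perfectly parallel items, such as `[[1, 1], [2, 2], [3, 3]]`, give an alpha of 1; two uncorrelated items give 0.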

Together, this methodological spine achieves a closed system. The mould creates a standardised diagnostic label, the story frame supplies its scientific rationale and the causality engine certifies its public risk. That pre-defined label is then projected—not discovered—across time, labs and countries via the data layer.
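The Causality Engine bullet argues that whether stable between-person differences are separated out changes the cross-lagged estimate. The toy simulation below illustrates that point under stated assumptions: it generates panel data in which stable traits for X and Y are correlated but neither score affects the other over time, then compares a cross-lagged regression on raw scores (CLPM-style, no random intercepts) with one on person-mean-centred scores (a fixed-effects-style, within-person estimate). It is not a full RI-CLPM, which would normally be fitted as a structural equation model; the function names and all parameter values are hypothetical.

```python
import random
from statistics import fmean


def simulate_panel(n_people=3000, waves=4, trait_corr=0.8, phi=0.3, seed=1):
    """Toy panel data: correlated stable traits plus independent AR(1) fluctuations.

    The true within-person cross-lagged effect of X on Y is zero by construction.
    """
    rng = random.Random(seed)
    start_sd = (1 / (1 - phi ** 2)) ** 0.5  # stationary SD of the AR(1) part
    xs, ys = [], []
    for _ in range(n_people):
        trait_x = rng.gauss(0, 1)
        trait_y = trait_corr * trait_x + (1 - trait_corr ** 2) ** 0.5 * rng.gauss(0, 1)
        wx, wy = rng.gauss(0, start_sd), rng.gauss(0, start_sd)
        px, py = [], []
        for _ in range(waves):
            px.append(trait_x + wx)
            py.append(trait_y + wy)
            wx = phi * wx + rng.gauss(0, 1)
            wy = phi * wy + rng.gauss(0, 1)  # no wx term: X never drives Y
        xs.append(px)
        ys.append(py)
    return xs, ys


def cross_lag(xs, ys):
    """Pooled OLS coefficient on x[t-1] when regressing y[t] on y[t-1] and x[t-1]."""
    rows = [(py[t], py[t - 1], px[t - 1])
            for px, py in zip(xs, ys) for t in range(1, len(py))]
    m_t, m_l, m_x = (fmean(col) for col in zip(*rows))
    s_ll = s_xx = s_lx = s_tl = s_tx = 0.0
    for y_t, y_l, x_l in rows:
        a, b, c = y_t - m_t, y_l - m_l, x_l - m_x
        s_ll += b * b
        s_xx += c * c
        s_lx += b * c
        s_tl += a * b
        s_tx += a * c
    # Solve the 2x2 normal equations by Cramer's rule for the x[t-1] coefficient.
    return (s_ll * s_tx - s_lx * s_tl) / (s_ll * s_xx - s_lx ** 2)


def person_centre(panel):
    """Subtract each person's own mean, removing any stable between-person level."""
    out = []
    for series in panel:
        m = fmean(series)
        out.append([v - m for v in series])
    return out


if __name__ == "__main__":
    xs, ys = simulate_panel()
    print("raw-score cross-lag:    ", round(cross_lag(xs, ys), 3))
    print("within-person cross-lag:", round(cross_lag(person_centre(xs), person_centre(ys)), 3))
```

With these settings the raw-score cross-lag comes out clearly positive (roughly 0.16 in expectation) even though X never affects Y, while the person-centred estimate sits near zero. Person-mean centring with a lagged outcome carries its own small-panel bias on the autoregressive term (Nickell bias), which is one more reason the choice of specification matters.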

B. Data layer — how the made category is pushed at scale

With the mould set in the Methods spine, the network projects it through three coordinated channels—long panels, small lab studies and big cross-country runs—to generate standardised data at volume.

- Longitudinal surveys: Queensland and Australasian partners embed CMQ/GCB blocks into national panels—such as the New Zealand Attitudes and Values Study (NZAVS), used to track within-person change in belief and outcome measures over three years. The aim is to line up data waves so RI-CLPM models can claim that shifts in the “conspiracy mentality” score precede later behaviour.

Problems like participant drop-out or shifting question context are treated as statistical noise to be smoothed, with constraints that enforce scalar/metric invariance even as contextual meanings shift. This infrastructure supported a documented production surge from 2021 to 2025, with studies like “Psychological Predictors of COVID-19 Vaccination in New Zealand” (2022) and “The Interplay Between Economic Hardship, Anomie and Conspiracy Beliefs in Shaping Anti-Immigrant Sentiment” (2025) demonstrating crisis-responsive manufacturing.

- Boutique lab studies: Kent and German labs run tightly controlled experiments to test the levers of the AMCT story: threat primes, agency loss and norm cues. These studies, with titles like “Prevention is better than cure: Addressing anti-vaccine conspiracy theories” (2017) and “Conspiracy beliefs and interpersonal relationships” (2025), generate the clean mediation figures required to populate the network’s harm ledgers. Authors like Jolley (vaccines), Green (prejudice) and Sutton (climate) specialise in distinct risk domains while maintaining theoretical coherence through Douglas’s central coordination. The methodological checks focus on procedure rather than premise, testing whether the script runs, not whether beliefs reflect reality.

- Multi-nation consortia: Brussels, Amsterdam and German teams push translated CMQ/GCB items across dozens of countries. Global expansion through projects like “Intentions to be Vaccinated Against COVID-19: The Role of Prosociality and Conspiracy Beliefs across 20 Countries” (2022) certifies the universality of the network’s diagnostic categories. Back-translation and statistical fit checks ensure the one-factor score “travels”: non-invariant items are iteratively removed until the template holds, yielding cross-national prevalence tables and invariance certificates that license global generalisation.
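The item-pruning step described in the Multi-nation consortia bullet can be sketched as a simple loop. This is a hypothetical simplification: it assumes per-country factor loadings have already been estimated (normally through multi-group confirmatory factor analysis, not shown), and the "largest cross-country spread" rule, the tolerance and the `prune_noninvariant` name are illustrative choices, not the consortia's documented procedure. It shows how the items that survive depend on the tolerance chosen.

```python
def prune_noninvariant(loadings, tolerance=0.1, min_items=3):
    """Iteratively drop the item whose loadings differ most across countries.

    `loadings` maps item -> {country: loading}. Stops when every remaining item's
    loading spread (max - min across countries) is within `tolerance`, or when
    only `min_items` items are left. Returns (kept_items, dropped_in_order).
    """
    kept = dict(loadings)
    dropped = []
    while len(kept) > min_items:
        spreads = {item: max(by_c.values()) - min(by_c.values())
                   for item, by_c in kept.items()}
        worst = max(spreads, key=spreads.get)
        if spreads[worst] <= tolerance:
            break  # every remaining item is "invariant enough"
        dropped.append(worst)
        del kept[worst]
    return sorted(kept), dropped
```

With five items whose cross-country loading spreads are 0.02, 0.40, 0.30, 0.05 and 0.04, a tolerance of 0.1 drops the 0.40 item and then the 0.30 item; a tolerance of 0.5 keeps all five.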

What this layer delivers

Together these channels form a validation mill: panels supply temporal claims, labs supply causal mechanisms and cross-country runs supply geographical reach. This system converted raw data into 68 precisely categorised publications (2021-2025), transforming survey responses into policy-ready risk assessments on a predictable schedule. The output is a continuous flow of standardised numbers—prevalence tables, change curves, country rankings—ready for assembly into diagnostic labels, harms ledgers and intervention toolkits in the Output Layer, with coordinated outputs across political, climate, interpersonal and computational domains arriving on cue.
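The labs' contribution is typically reported as a mediation result of the kind mentioned under Boutique lab studies. A common way to compute one is the product-of-coefficients approach: path a (X predicting M) times path b (M predicting Y, holding X constant). A minimal sketch, assuming linear OLS paths and made-up data; published analyses usually add bootstrapped confidence intervals, which are omitted here, and the function names are hypothetical.

```python
from statistics import fmean


def _slope(x, y):
    """Simple OLS slope of y on x."""
    mx, my = fmean(x), fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def _two_predictor(x, m, y):
    """OLS coefficients (on x, on m) for y ~ x + m, via the centred normal equations."""
    mx, mm, my = fmean(x), fmean(m), fmean(y)
    xc = [v - mx for v in x]
    mc = [v - mm for v in m]
    yc = [v - my for v in y]
    sxx = sum(v * v for v in xc)
    smm = sum(v * v for v in mc)
    sxm = sum(a * b for a, b in zip(xc, mc))
    sxy = sum(a * b for a, b in zip(xc, yc))
    smy = sum(a * b for a, b in zip(mc, yc))
    det = sxx * smm - sxm ** 2
    return (smm * sxy - sxm * smy) / det, (sxx * smy - sxm * sxy) / det


def mediation(x, m, y):
    """Return (a, b, indirect a*b, direct c', total c) for X -> M -> Y."""
    a = _slope(x, m)
    c_direct, b = _two_predictor(x, m, y)
    return a, b, a * b, c_direct, _slope(x, y)
```

With `x = [0, 1, 2, 3]`, `m = [1, 1, 3, 7]` and `y = [2, 6.5, 7, 23.5]`, `mediation` returns a = 2, b = 3, an indirect effect of 6, a direct effect of 0.5 and a total effect of 6.5 (up to floating-point rounding). Reading a*b as a causal mechanism also assumes the mediator is not confounded with the outcome, which randomising X alone does not guarantee.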

C. Output layer — how numbers turn into diagnoses, harms and fixes

The validation mill converts data into policy products on a timetable aligned with ERC work-package milestones. The standardised numbers from the data layer are assembled into three saleable outputs—diagnostic labels, harms ledgers and toolkits—each engineered for policy uptake rather than open-ended inquiry.

- Diagnostic labels: The CMQ/GCB score is marketed to policy as a population-level risk indicator for a singular disposition called conspiracy mentality. Cut-points and percentiles transform a continuous index into clinical-sounding categories, while adolescent variants extend this diagnostic badge into educational settings. Between 2021 and 2025, these labels were operationalised across specialised clusters, with prejudice research (25 publications) becoming the dominant output stream. This expansion is documented in titles like “Belief in conspiracy theories and intentions to engage in everyday crime” (2019) and “Denialist vs Warmist Climate Change Conspiracy Beliefs: Ideological Roots, Psychological Correlates and Environmental Implications” (2025), demonstrating how the diagnostic framework systematically moved into community and social governance domains.

- Harms ledgers: Associations from panels and labs are collated into four standardised ledgers (vaccines, 16 publications; climate, 9; prejudice, 25; radicalisation, 18) and presented as definitive impact pathways. This accounting exercise tallies correlations as costs to public goals, visualised through graphics showing predicted drops in compliance or rises in hostility. The system produced studies like “Conspiracy theories: Their propagation and links to political violence” (2023, CREST Review) (radicalisation ledger), “Concern with COVID-19 pandemic threat and attitudes towards immigrants: The mediating effect of the desire for tightness” (2021) (prejudice ledger) and “Extreme weather event attribution predicts climate policy support across the world” (2025) (climate ledger). The accounting remains fundamentally one-sided, recognising only the “harms” of public belief while systematically excluding costs of institutional failure or policy coercion.

- Toolkits: Findings are packaged as ready-made interventions (prebunking scripts, inoculation exercises, norm-cue prompts) for communication teams and platforms. Efficacy is certified through short-horizon experiments measuring minor attitude shifts, which are then scaled into training modules and product guidelines. Intervention development evolved from basic laboratory studies to multi-national policy evaluations. Earlier titles such as “Practical recommendations to communicate with patients about health-related conspiracy theories” (2022), “A call for caution regarding infection-acquired COVID-19 immunity: The potentially unintended effects of ‘immunity passports’ and how to mitigate them” (2021) and “Prevention is better than cure: Addressing anti-vaccine conspiracy theories” (2017) prescribe exactly which behavioural levers to pull, while 2023 to 2025 outputs add machine-learning-enhanced instruments and platform-ready scripts.

What this layer delivers

Outputs emerge as policy-ready artefacts: diagnostic labels to segment populations, harms ledgers to justify intervention in vaccine, climate or security domains, and toolkits claiming to fix the manufactured problem. By the time these products leave the factory floor, they no longer read as academic arguments. They function as instructions and public health advisories, primed for the distribution relay that carries them into media and platform governance.

D. Distribution layer — how outputs become reality claims

The factory’s products don’t move themselves—distribution functions as the sales and marketing division that converts academic papers into public talking points and policy directives.

- University press offices: Kent and Queensland press teams turn manuscripts into kits (headline, bullets, graphic) and seed them to news desks. The production system generates strategically timed outputs, with alarmist titles like “How to prevent conspiracy theories from influencing US election votes” and “A wicked problem with tame solutions” designed for easy translation into public-facing warnings about “who is vulnerable.” Success is measured in media pick-ups and altmetrics, which double as “impact” evidence for the next grant bid, completing one cycle as the next begins.

- The Conversation (and equivalents): Researchers rewrite complex papers into 900-word explainers co-edited for maximum reach. Charts are simplified, uncertainty is minimised and intervention strategies are foregrounded. These pieces are syndicated into mainstream outlets under Creative Commons, multiplying the message while preserving the original framing across platforms. Examples from Karen Douglas’s pen include “The internet fuels conspiracy theories – but not in the way you might imagine” and “Conspiracy theories fuel prejudice towards minority groups”.

- Broadcast & press: Outlets such as the BBC, Huffington Post and Daily Mail use the press kits and explainers to produce standardised segments, typically featuring one expert, one graphic and one solution. The harms ledgers supply the dramatic stakes while the toolkits provide the seemingly straightforward remedy, with dissenters consistently framed through the diagnostic categories the network has manufactured.

- Platform & policy briefs: The same core messages are repackaged for government departments, tech platforms and NGOs as “what we know” and “what to do next.” Publication patterns show explicit alignment with funder priorities. Security-focused findings are funnelled through policy-adjacent relays such as the CREST Review, in articles like “Conspiracy Theories: How are they adopted, communicated and what are their risks?”, while health compliance studies feed directly into public health bulletins and platform safety guidelines. Security-adjacent relays (CREST venues and the U.S. bridge into political behaviour) carry the same messages into guidance and training.

What this layer delivers

Distribution standardises the public narrative as effectively as the methods spine standardises measurement: diagnostic labels become normalised, harms are dramatised and interventions are operationalised. This completes a closed loop where media metrics serve as impact evidence, fuelling the grant applications that finance the next production cycle—ensuring the factory’s continuous operation.

Round-Off — What The Line Builds

The map and ledger resolve to one finding—CONSPIRACY_FX sits at the centre of a vertically integrated line that standardises a diagnostic label, projects it at scale, then installs it in policy language. Funders specify the brief, hubs manufacture the measures, the methods spine locks the template, the data layer supplies volume, the output layer packages risk, the relay sells it to media and platforms. That is the operating model documented across Figures 1–4.

The practical consequence is structural path dependence. Once the CMQ/GCB chassis and AMCT script are installed, almost every subsequent study inherits their assumptions, and almost every “harms” ledger is an accounting exercise against those priors rather than a test of them.

The loop closes when press metrics return as “impact” to justify the next grant.

Next: Deconstructing key assumptions underlying the TISP scaffold

The next article in this Mindwars series will examine a paper covering the global Many Labs study, Trust in Science and Science-Related Populism (TISP). Specifically, it will address six premises embedded in the study’s architecture: 1) science as monolith, 2) trust as default, 3) dissent as pathology, 4) perception over conduct, 5) a coherent “science-related populism” and 6) communication over reform. The frame is simple and severe: treat TISP as governance technology and ask whose legitimacy it manufactures and at what public and institutional cost.

Published via Journeys by the Styx.

Mindwars: Exposing the engineers of thought and consent.

—

Author’s Note

Produced using the Geopolitika analysis system, an integrated framework for structural interrogation, elite systems mapping and narrative deconstruction. Assistance from DeepSeek for composition and editing.