Mindwars: From CONSPIRACY_FX to Perceptions of Science

A look at how an apparently neutral report on attitudes to science across 68 countries shapes a technocratic worldview that pathologises dissenting voices.

What this article examines. In Scientific Data (2025), Mede, Cologna, Berger, Besley, Brick, Joubert, Maibach, Mihelj, Oreskes, Schäfer and a large consortium present the TISP data descriptor: a Many Labs survey of ~72,000 respondents across 68 countries (collected Nov 2022–Aug 2023) measuring trust in scientists, science-related populist attitudes, views on science’s role in society and policy-making, science-media use and communication behaviour, and climate attitudes/policy support. The version used here was downloaded from the University of Kent’s Academic Repository (KAR). Our analysis interrogates how this descriptor’s framing, author economy and platform rails shape the worldview it projects, and how that matters for downstream policy and public discourse.

Why This Report Came Into Focus

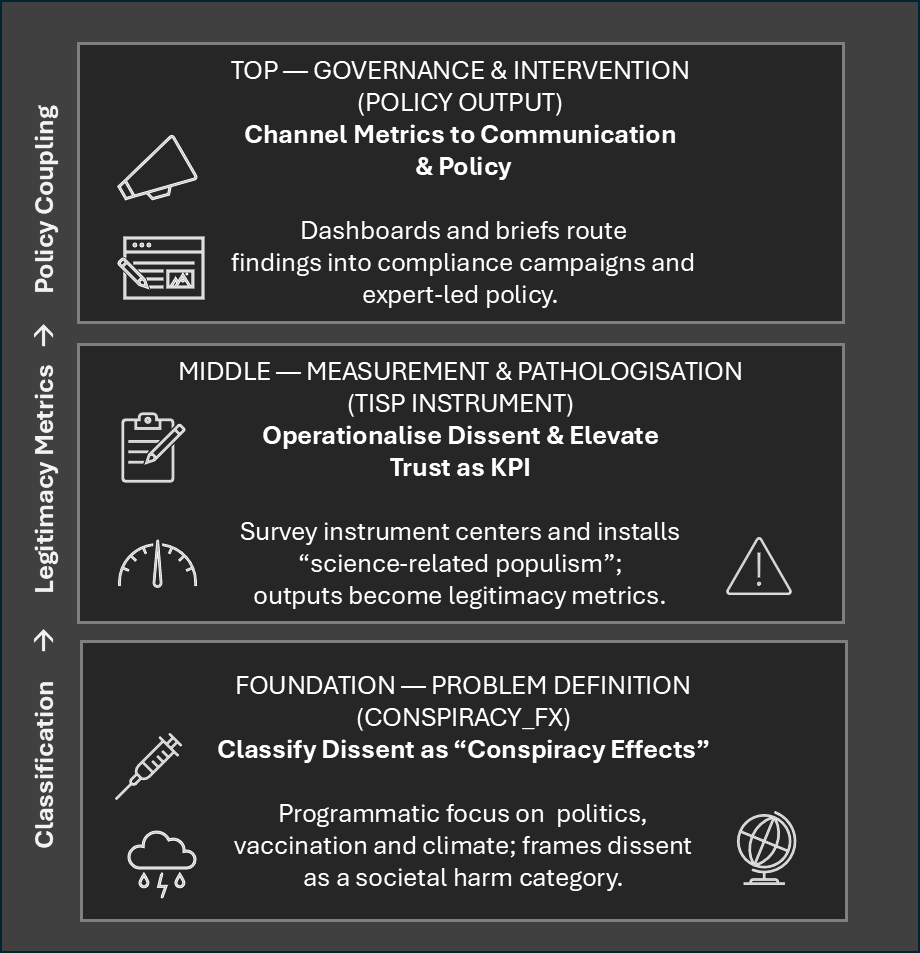

The TISP report came into focus because its author roster intersects with the organised “conspiracy theorist” research stack examined in previous Mindwars articles: specifically, the ERC-funded CONSPIRACY_FX programme directed by Prof. Karen Douglas of the University of Kent. That stack’s core brief is to study the effects of conspiracy beliefs across politics, vaccination and climate, while TISP operationalises trust and “science-related populism” at global scale. The two are halves of the same governance frame.

The linkage in plain view

- CONSPIRACY_FX mandate. Douglas’s project is explicitly scoped to measure and manage the consequences of conspiracy theories, with climate named as a key arena. This is the definitional tower for classifying dissent as harmful effects.

- TISP’s declared scope. The Harvard-hosted TISP Many Labs study measures trust in scientists and science-related populist attitudes across countries and routes findings toward policy relevance—especially climate.

- Kent tie-in. The TISP dataset descriptor is hosted in the University of Kent’s repository, underscoring institutional overlap with the CONSPIRACY_FX network.

Why the overlap matters

- Frame continuity. CONSPIRACY_FX problematises “conspiracy effects”; TISP’s instrument then codifies dissent via a labelled construct, “science-related populism”, and pairs it with policy support questions (climate menu). The net effect is a pipeline from classification → legitimacy metrics → policy coupling.

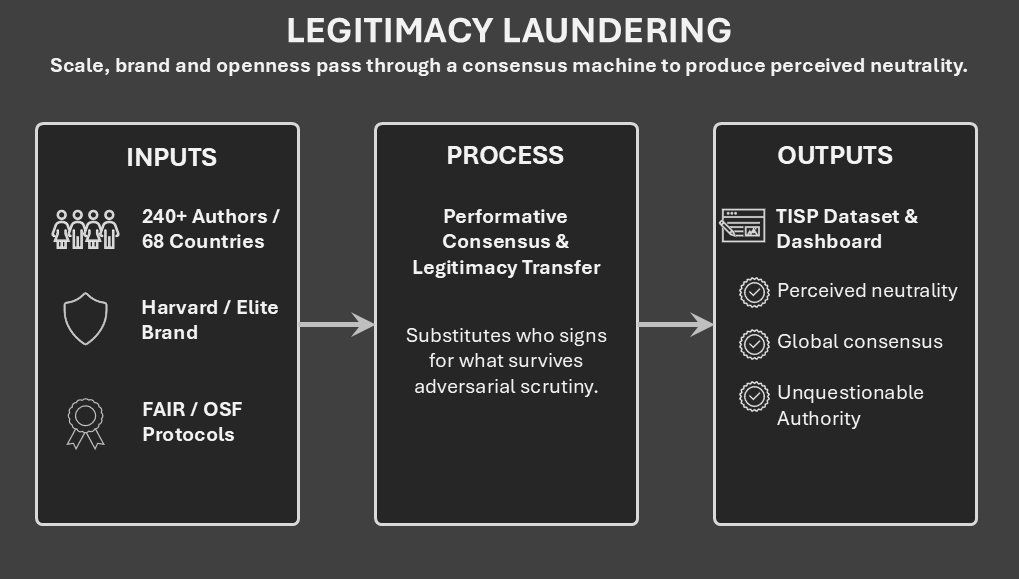

- Legitimacy laundering by coalition. TISP advertises a very large, geographically distributed author group; co-authorship for fielding amplifies consensus optics while the core constructs remain centrally governed. That architecture substitutes who signs for what survives adversarial validity tests.

The reading is structural, not personal

TISP isn’t an isolated dataset; it functions inside a conspiracy-effects governance stack. One tower (CONSPIRACY_FX) develops the categories and narrative (“conspiracy” as harm in politics/vaccines/climate). Another tower (TISP) measures deference (trust) and pathologises dissent (“science-related populism”), then platformises the frame via dashboards and co-authorship optics. Together they stabilise a technocratic/noocratic worldview where disagreement is a risk state to be managed rather than a signal for institutional self-audit. This worldview replaces public debate and political negotiation with pre-determined, expert-led solutions, where the only legitimate public role is to assent.

Setting the TISP Scene — Background, Source, Setup and Author Architecture

The backdrop isn’t mild “public confusion”; it’s a legitimacy crisis inside the knowledge system itself—replication failures, publication bias, p-hacking/HARKing, prestige-driven editorial filters and politicised peer review. John Ioannidis’ metascience literature made the core indictment explicit: under current incentives, large swathes of published findings are fragile and journals often reward narrative conformity over adversarial robustness. Into that breach, TISP arrives as a neutral-looking data descriptor (global samples, preregistration, FAIR/OSF, dashboard). But its instrument design—centring trust and science-related populism—and its platform rails (Many Labs consortium, global roster) reframe the crisis as a deficit of public deference rather than a deficit of institutional performance. By co-positioning a climate-policy menu with these constructs, TISP channels interpretation toward policy compliance while leaving process audits (COIs, error-correction latency, retraction visibility, back-casts, minority reports) offstage.

Leaders, advisory board and the Harvard anchor

Who runs TISP. The project is led by Viktoria Cologna (main lead; Postdoctoral Fellow at Harvard during TISP; now at Collegium Helveticum) and Niels G. Mede (second lead; IKMZ, University of Zurich). An advisory board concentrates brand capital from science-communication and climate-attitudes hubs: Sebastian Berger (Bern), John Besley (Michigan State), Cameron Brick (Amsterdam), Marina Joubert (Stellenbosch), Edward W. Maibach (George Mason), Sabina Mihelj (Loughborough), Naomi Oreskes (Henry Charles Lea Professor of the History of Science, Harvard), Mike S. Schäfer (Zurich) and Sander van der Linden (Cambridge).

Why the Harvard context matters. The leadership profile explicitly situates TISP within Harvard-linked networks (lead’s Harvard postdoc; Oreskes as Harvard-based sponsor/board member), which functions as a reputational spine for the consortium’s outputs and public-facing messaging. The official welcome/overview materials foreground the global scope and FAIR/OSF rails—positioning TISP not just as a dataset, but as a platform under prestigious institutional colours.

How this plugs into the frame.

- The elite-institution anchor (Harvard) + star advisors transfer authority to the instrument design (trust + “science-related populism”), reinforcing the technocratic posture diagnosed above.

- The consortium optic still substitutes who signs for what’s falsifiable until invariance/DIF, weight-sensitivity and institutional-performance audits sit alongside the trust batteries.

In these terms, TISP’s leadership and advisory mesh are not mere acknowledgments; they are legitimacy engines—a Harvard-centred brand scaffold that amplifies the instrument’s default worldview unless counter-balanced by published robustness cards and process-symmetry measures.

What the TISP report says it’s about

TISP presents a pre-registered, cross-sectional survey (N = 71,922 respondents; 68 countries, 37 languages) to measure: (1) trust in science/scientists, (2) science-related populist attitudes, (3) views on science’s role in society/policy, (4) science-media use and communication behaviour, plus climate attitudes/policy support. Materials and data are hosted on OSF; a public dashboard is promoted; FAIR is foregrounded as the legitimacy stack.

Why that matters. A “neutral” global descriptor becomes a steering mechanism: by centring trust and science-related populism—then co-positioning a climate policy menu—the instrument channels uptake along a normative path (deference → policy support), amplified by FAIR/prereg and platform optics. The remedy is not to shrink the survey; it’s to fork the instrument (language invariance/DIF and weight-sensitivity first) and add institutional-performance batteries alongside trust. A truly symmetrical instrument would pair ‘trust in scientists’ with questions about ‘perceived scientific integrity’ (e.g., frequency of data manipulation, susceptibility to corporate funding). It would pair ‘science-related populism’ with items measuring ‘perceived institutional arrogance’ or ‘concern about the silencing of dissenting views within science.’

How It Is Set Up: Rails, Not Just Results

- Many Labs consortium with 88 post-hoc weighted samples; global coverage beyond “WEIRD”; explicit aim to inform policymakers and science-communication practitioners.

- FAIR/OSF + dashboard function as method warrants and distribution rails—good practices, but here they double as authority signals unless paired with robustness cards (invariance, weights) before cross-country aggregation.
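The weight half of such a robustness card can be sketched in a few lines. This is a minimal illustration with invented numbers, not TISP data and not the consortium’s own weighting procedure; the function names, the 1–5 score scale and the 0.25 tolerance are all assumptions made for the example.

```python
from typing import Dict, Sequence


def weighted_mean(values: Sequence[float], weights: Sequence[float]) -> float:
    """Mean of `values` where each value counts in proportion to its weight."""
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(v * w for v, w in zip(values, weights)) / total


def weight_sensitivity(values: Sequence[float],
                       weights: Sequence[float]) -> Dict[str, float]:
    """Report the estimate with and without weights, plus the shift between them."""
    unweighted = sum(values) / len(values)
    weighted = weighted_mean(values, weights)
    return {
        "unweighted": unweighted,
        "weighted": weighted,
        "shift": weighted - unweighted,
    }


def exceeds_tolerance(card: Dict[str, float], tolerance: float) -> bool:
    """Flag a sample whose headline number depends heavily on the weights."""
    return abs(card["shift"]) > tolerance


# Hypothetical trust scores (1-5 scale) for one country sample, with
# hypothetical post-hoc weights that up-weight the two low-trust respondents.
scores = [4.0, 4.0, 2.0, 2.0]
weights = [1.0, 1.0, 3.0, 3.0]

card = weight_sensitivity(scores, weights)
flagged = exceeds_tolerance(card, tolerance=0.25)  # tolerance is an arbitrary choice
```

Publishing the weighted estimate, the unweighted estimate and the shift side by side for every sample would let readers see how much of a cross-country comparison rests on the weighting scheme rather than on the responses.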

Who Are the Authors (and What the Author List Does)

The TISP report’s author list encompasses ~240 names from 170+ institutions across all continents (68 countries, 37 languages). This long list doesn’t just document collaboration; it manufactures consent optics. Authorship-for-fieldwork operates as an in-kind payment that buys both data and performative consensus: hundreds of names spread across those countries create the appearance of neutrality while the core constructs remain centrally governed.

From this perspective, until contribution roles, invariance/weight tests and adversarial analyses are published alongside the wall of names, the project’s author architecture can be seen to function as legitimacy laundering within an academic authorship economy more than as a validity guarantee. Realistically, no paper with just 13 pages of effective content is written by some 240 people; in all likelihood the two leads wrote the paper itself, while the remainder acted as nodes within the survey outreach.

The World View Revealed — Why Six Assumptions Matter

A critical read of the Abstract and the Background and Summary section of the paper reveals that TISP doesn’t just take the temperature of public opinion; it chooses the climate of meaning in which opinion is judged. In a system-wide legitimacy crisis (replication failures, politicised review, COIs, lived-reality mismatch), the paper reframes the problem as public deference rather than institutional performance. That reframing rests on six load-bearing assumptions that quietly police the boundary between “legitimate concern” and “illegitimate dissent,” and then couple the approved attitudes to a narrow climate-policy menu.

Read the next section as a structural x-ray, not a motives hunt. Each assumption is a gate: it sets the evaluative frame, routes disagreement and prefigures policy uptake. Surface quotes are followed by a short “Illustrates” read to make the mechanism explicit. Together, they show how a neutral-sounding data descriptor becomes a governance instrument—and where pluralised measurement (forks, robustness cards and institutional-performance audits) must be inserted to break the seal.

Assumption 1: Science is a monolithic and unquestionable authority

Throughout the text, the authors treat Science as a singular, unitary actor that dispenses “the best available knowledge,” and recast the legitimacy problem as insufficient public trust. Faced with a system-wide legitimacy crisis marked by replication failures, politicised review and lived-reality mismatches, the paper’s diagnosis is telling: the problem isn’t the institutions, it’s the people. Loss of legitimacy is framed as a civic hazard, not as a potentially warranted response to error, conflict of interest, politicised review or failed predictions within scientific institutions. This can be seen in lines such as:

“Scientific evidence and expertise are fundamental to society. They can inform policy-making, individual decision-making and public discourse… to effectively fulfil this role, scientists need… to be perceived as trustworthy by the public. Otherwise science will lose legitimacy and thus be limited in its capacity to provide the best available knowledge to society.”

Mechanism (how the assumption is built into the paper):

- Monism by grammar: “science” appears as one voice; “scientists” are its emissaries. Method (fallible, provisional) fuses with institution (journals, agencies) and with outcomes (“best available knowledge”).

- Legitimacy as perception: the operative dependency is to be perceived as trustworthy (a trust KPI) rather than to be auditably accountable (error-correction latency, COI discipline, retraction visibility).

- Problem redefinition: the crisis shifts from process integrity to public attitude; the instrument then measures attitudes (trust, “science-related populism”) rather than auditing the process that produced distrust.

This foundational assumption produces several critical structural effects:

- Authority laundering: Procedural signals (FAIR/prereg, large consortium) plus the trust KPI substitute for validation (cross-language invariance, weight robustness, track-record audits).

- Boundary creep: Democratic questions about where expertise ends and politics begins are recoded as threats to epistemic authority.

- Dissent compression: Citizens’ boundary-setting (“don’t legislate my life by expert decree”) is gravitationally pulled toward pathology buckets (populism/misinformation).

What falls out of frame:

- Institutional performance metrics: COI handling, correction/retraction practice, minority-report protocols, whistleblower protection and back-casts (what was forecast vs what happened) are not measured alongside trust.

- Plural knowledges: Local risk, cost–benefit and institutional track record as alternative mediators of policy support are underweighted or absent.

As written, the paper treats legitimacy as something the public must grant; a scientific culture worthy of that legitimacy treats it as something institutions must earn, auditably, measurably and alongside any trust score. This inversion is the paper’s core normative commitment: legitimacy becomes a debt the public owes to institutions rather than a standing that institutions must continually earn through demonstrable integrity.

Assumption 2: Trust in institutions is the default, healthy position

The paper performs a crucial conflation: it places legitimate democratic boundary-setting—concerns about experts “illegitimately intruding” in policy and personal life—in the same threat category as “populist claims.” The effect is to treat constitutional checks on expert jurisdiction as a pathology, folding principled constraint together with demotic rhetoric. This can be seen in phrases like:

“They suggest that the epistemic authority of science has been challenged by: … concerns about scientists illegitimately intruding in policy-making, public debate and people’s personal lives … and populist claims about academic elites disregarding common sense …”

Mechanism (how the assumption is engineered):

- Equivalence construction: Heterogeneous phenomena—normative concerns about expert overreach and “populist” anti-elite talk—are grammatically yoked as co-equal assailants of “epistemic authority.” The pairing contaminates the first with the stigma of the second.

- Default norming: The healthy baseline is pre-defined as trust; any deviation is framed as a “challenge.” This shifts the burden of justification from institutions (which must demonstrate their worth) to the public (which must explain its reluctance).

- Role-expansion cue: Because the “challenge” is framed as a public attitude problem, the implied solution naturally tilts toward granting experts more influence in policy, pre-validating survey items that ask for increased scientist control.

Structural effects in the instrument:

- Boundary debates recoded as deviance: Respondents who endorse strict lines between expertise and law-making are structurally categorised as low-trust or adjacent to “populism.”

- Jurisdictional creep by survey design: Questions about scientists’ role in government are anchored to a frame where limits are already suspect; responses defending those limits depress the “healthy” metric.

- Attitude→policy funnel: Once boundary-setting is deemed a “challenge,” coupling trust questions to a climate-policy menu automatically frames institutional caution as policy obstruction.

What drops out of frame:

- Democratic sovereignty: The fundamental question of whether unelected experts should wield decision-making power on value-laden issues is not treated as a legitimate axis of disagreement.

- Domain specificity: The framework assumes the same trust posture should apply from technical consensus to personal-life regulation, erasing contexts where public restraint of expertise is a virtue.

- Institutional history: Past episodes where “expert” mandates produced documented harm are not available as rational grounds for present-day limits; they are re-cast as public “attitude defects.”

This is achieved through a specific rhetorical choreography: “Illegitimate intrusion” is listed alongside “populist claims,” coded as a “challenge,” which then invites prescriptions for “communication” aimed at raising deference, not for negotiating the proper scope of expert power. The pairing implicitly assigns a moral valence: deference is virtuous, while limits are suspect.

The ultimate measurement consequence is that trust is transformed from a descriptor into a normative yardstick for civic virtue. The instrument is designed so that a respondent’s insistence on democratic limits to expert authority is automatically registered not as a constitutional preference, but as an epistemic deficiency.

Assumption 3: Dissent is a pathological “attitude” to be managed

The paper explicitly places “science scepticism” in the same category as climate change itself, framing it not as a potential signal but as a direct “societal challenge” to be “addressed” by institutional actors. Dissent is thereby re-cast from a political reality into a managerial problem.

“Such evidence can facilitate recommendations for policymakers, educators, science communication practitioners and other stakeholders on how to address societal challenges such as science scepticism and climate change.”

Mechanism (how the assumption is engineered):

- Problem Coding: Scepticism is defined as a public condition, never as a potential symptom of institutional failure or a legitimate epistemological stance.

- Managerial Cast: The active verbs (“facilitate,” “address”) and the list of professional actors (“policymakers… educators… communication practitioners”) map dissent directly onto a professional intervention workflow.

- Moral Co-location: By rhetorically pairing “scepticism” with the urgent, physical problem of “climate change,” the paper aligns attitude correction with moral and policy necessity, pre-emptively closing down space for procedural or substantive debate.

Structural effects in the instrument:

- Target Acquisition: The attitude batteries (trust, “science-related populism”) function not to understand but to identify which populations and beliefs require modification.

- Homogenisation of Motives: The instrument flattens a wide spectrum of disagreement—from cost-benefit analysis and conflict-of-interest concerns to methodological critique—into a single, pathological “scepticism” score.

- Compliance Pathway: Once scepticism is framed as a “challenge,” the analytical pathway is set: lower scepticism scores become the primary metric of “success,” predisposing the entire research programme toward measuring compliance.

What drops out of frame:

- Institutional Causality: Potential rationales for dissent rooted in institutional performance—poor conflict-of-interest discipline, slow error-correction, a history of inaccurate forecasts—are excluded as explanatory variables.

- Legitimate Boundary-Setting: A citizen’s preference for keeping expert advice advisory, not binding, is treated as an attitude deficit, not a constitutional principle.

- Contextual Reasoning: Scepticism that is specific to a particular policy, claim or context is recoded as a global character trait.

The rhetorical choreography here is a closed loop: “Evidence” → “recommendations” → “stakeholders” → “address scepticism.” This pipeline moves directly from measurement to management, systematically bypassing any step that would inspect institutional performance as a potential cause. The citizen is positioned as the subject of intervention; the expert, as the operator.

The ultimate measurement consequence is that the dataset does not merely describe a landscape of belief; it produces a high-resolution targeting map for trust-elevation and persuasion campaigns. By its very design, it transforms disagreement from a diagnostic signal about the health of the science-society relationship into a variable to be reduced.

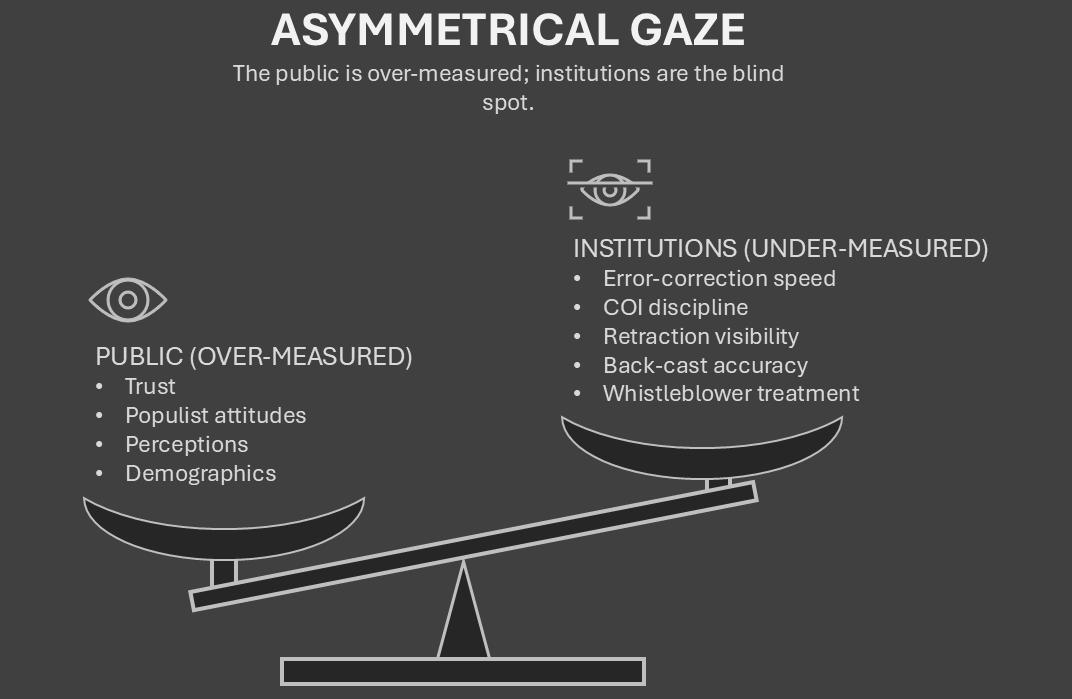

Assumption 4: The problem lies with public perception, not institutional conduct

The paper’s entire methodological gaze is fixed on public attitudes—trust, “science-related populism,” perceptions—while the conduct and performance of scientific institutions remain unmeasured. The legitimacy crisis is instrumented exclusively as a matter of what people think, never as a consequence of what institutions do.

“We investigated these concerns with a global, pre-registered, cross-sectional online survey of N=71,922 participants... The survey measured individuals’ (1) trust in science and scientists, (2) science-related populist attitudes, (3) perceptions of the role of science...”

Mechanism (how the assumption is engineered):

- Operational Focus: Every primary construct targets the dispositions of individuals. There are no parallel constructs measuring institutional behaviours such as error-correction speed, conflict-of-interest discipline or retraction practices.

- Unit of Analysis Lock: The individual respondent is the sole analysable object. Institutions like “science” or “scientists” appear only as vague reference categories, not as entities whose measurable practices could be correlated with public trust.

- Pre-registration Optics: The emphasis on procedural hygiene (pre-registration, large-N) frames the study as rigorously neutral, while this very framework systematically excludes institutional-process variables from the measurement map.

Structural effects in the instrument:

- Causal Asymmetry: The research design hunts for all explanatory variance in public psychology, making variance in institutional performance structurally invisible and thus irrelevant to the analysis.

- Attribution Funnel: Any downstream statistical model will inevitably attribute policy support or resistance to individual attitudes (trust/populism), because these are the only high-resolution predictor variables the instrument collects.

- Normative Gravity: High trust is pre-set as the healthy baseline. Lower trust is automatically framed as a deviation to be explained by demographic or psychological flaws in the populace, rather than by potentially poor institutional behaviour.
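The attribution funnel is an instance of omitted-variable bias, and a small simulation can make the mechanism concrete. The sketch below is purely illustrative and uses synthetic data: it assumes, for the sake of argument, that an unmeasured institutional-performance variable drives both trust and policy support, and that trust has no effect of its own. It says nothing about what TISP data would show; all variable names are hypothetical.

```python
import random


def mean(xs):
    return sum(xs) / len(xs)


def slope(x, y):
    """Ordinary least-squares slope of y regressed on x (with intercept)."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var = sum((a - mx) ** 2 for a in x)
    return cov / var


def residuals(y, x):
    """What is left of y after removing its linear dependence on x."""
    b = slope(x, y)
    mx, my = mean(x), mean(y)
    return [yi - (my + b * (xi - mx)) for xi, yi in zip(x, y)]


rng = random.Random(0)  # fixed seed so the run is repeatable
n = 5000

# Assumed data-generating process: performance is the only real driver.
performance = [rng.gauss(0, 1) for _ in range(n)]
trust = [p + rng.gauss(0, 1) for p in performance]
support = [p + rng.gauss(0, 1) for p in performance]

# Model A: performance was never measured, so trust gets the credit.
naive = slope(trust, support)

# Model B: control for performance (Frisch-Waugh partialling-out);
# this equals the trust coefficient in a regression on both predictors.
partial = slope(residuals(trust, performance), residuals(support, performance))
```

Under these assumptions the naive slope comes out clearly positive while the partial slope sits near zero: a model that can only see attitudes will credit attitudes, whatever the underlying cause.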

What drops out of frame:

- Process Integrity Metrics: No data on institutional correction latency, COI handling, transparency, the treatment of minority reports, whistleblower protections or the accuracy of past predictions.

- Jurisdictional History: Documented episodes of expert overreach or politicisation that would provide a rational, historical basis for depressed trust.

- Contextual Mediators: Local risk exposures, implementation costs or public service reliability—factors that can shape attitudes toward scientific mandates for entirely rational, non-ideological reasons.

Rhetorical Choreography: The argument proceeds via a telling sequence: “Concerns” → “global survey” → “measured individuals’ trust/populism/perceptions.” This chain seamlessly recodes a system-level legitimacy problem—which could stem from institutional failure—into a deficit located within the attitudes of the survey respondents.

The ultimate measurement consequence is that the dataset does not merely describe public opinion; it produces a high-resolution map of deference. Because institutional conduct is an unmeasured blank, any observed scepticism is primed to be interpreted as public misalignment or pathology. The inevitable prescription will be to “fix” public attitudes, while the question of whether institutions themselves need fixing is systematically engineered out of the conversation.

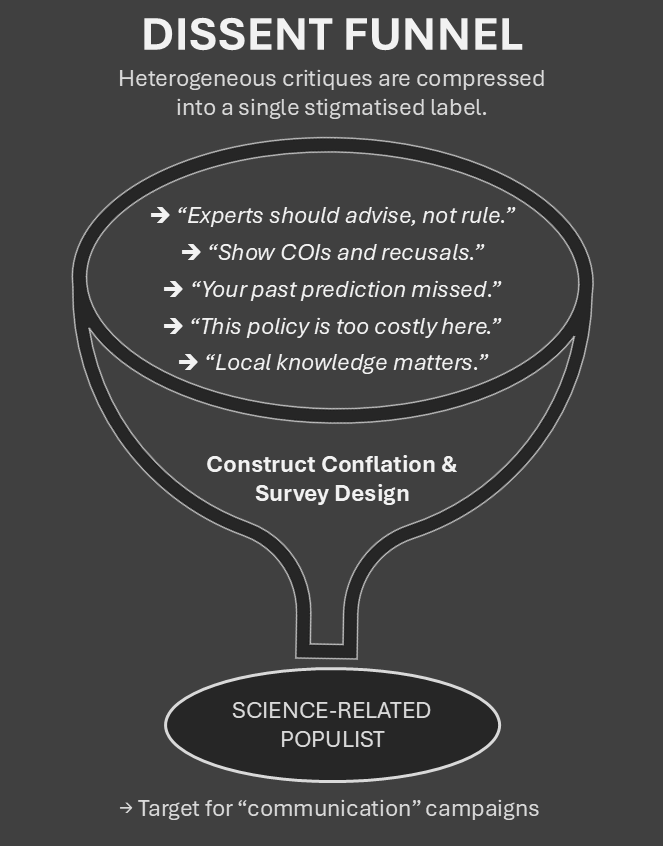

Assumption 5: “Science-related populism” is a coherent and dangerous category

The paper installs the construct of “science-related populist attitudes” as a central pillar, performing a conceptual sleight-of-hand that transforms a wide spectrum of criticisms—from democratic boundary-setting to accountability demands—into a single, measurable pathology.

“The survey measured individuals’... (2) science-related populist attitudes... including trust in scientists and science-related populist attitudes as well as relevant correlates...”

Mechanism (how the assumption is engineered):

- Category Creation → Category Control: The scholarly network coins a bespoke label and immediately operationalises it within its own global instrument. This circular process graduates a contested concept from a theoretical hypothesis to an accepted taxonomic fact.

- Conflation by Adjacency: The construct is strategically placed alongside “trust” and “policy support” in the survey. This design ensures that whatever triggers the “populism” items will automatically correlate with lower “trust” and predict policy resistance, creating a self-fulfilling explanatory arc.

- Homogenisation of Dissent: The scale collapses a heterogeneous range of motives—from legitimate cost-benefit concerns and methodological critiques to alarms over institutional conflicts of interest—into a single, unified syndrome.

Structural effects in the instrument:

- Pathology Framing: A high score on the scale types an individual as attitudinally deviant. The mere existence of the “science-related populism” metric frames certain critiques as inherently dangerous and in need of management.

- Self-Sealing Loop: Subsequent statistical models will naturally attribute policy resistance to this “populist defect,” because the instrument provides no variables to measure the alternative explanation: flawed institutional behaviour.

- Moral Coloration: The label deliberately borrows the negative valence of “populism” from political discourse and transfers it to science-governance disputes, pre-emptively coding certain critiques as low-status and illegitimate.

What gets bundled under the label—the construct indiscriminately lumps together:

- Elite-sceptic narratives (concerns about academic-political entanglement)

- Experiential weighting (valuing lived experience or local knowledge)

- Jurisdictional limits (arguments for keeping expert roles advisory)

- Accountability demands (for transparency, COI discipline, minority reports).

All of these can be rational, democratic stances. The construct architecture treats them not as distinct positions, but as symptoms of a single disorderly attitude.

The rhetorical choreography here is a closed circuit: Label → Items → Score → Correlation with low trust → Narrative of risk. The initial definitional act manufactures the very syndrome that the subsequent analysis claims to discover.

The ultimate measurement consequence is that, once canonised, “science-related populism” functions as a diagnostic funnel. It takes specific, contextual disagreements and recasts them as evidence of a general character flaw in the populace. This legitimises targeted “communication” and “trust-building” campaigns aimed at the public, while systematically keeping questions of institutional integrity and performance out of the analytical frame.

Assumption 6: The solution is better “communication,” not reform

The paper explicitly channels its findings toward tooling “communication practitioners” to “address” scepticism. This frames the core societal task as one of message delivery and public adjustment, systematically excluding the alternative: scrutiny and reform of institutional conduct or knowledge-production pipelines.

“Such evidence can facilitate recommendations for… science communication practitioners and other stakeholders on how to address societal challenges…”

Mechanism (how the assumption is engineered):

- Operator Selection: The named end-users are “policymakers, educators, science-communication practitioners”—all actors whose profession is to manage public audiences, not to audit institutional conflicts of interest, error-correction or governance.

- Verb Discipline: The active language (“facilitate,” “recommendations,” “address”) signals a closed, managerial workflow: diagnose attitudes → craft messages → implement public interventions. The presumed locus of change is the citizenry, never the institution.

- Endpoint Framing: By defining “science scepticism” as a “societal challenge” akin to a physical problem, the paper pre-selects communication as the logical remedy for public deviation, rather than treating scepticism as potential feedback signalling a need for process improvement.

Structural effects in the instrument:

- Pipeline Lock: The measures of trust, “science-related populism,” and policy support generate data that segments the population for targeted outreach. They do not produce variables that could implicate or critique institutional practice.

- Openness Re-tasked: The FAIR/OSF principles and the public dashboard are presented alongside communication aims, making them appear less like tools for adversarial audit and more like technologies for building trust—using transparency as persuasion collateral.

- Policy Adjacency: Because public attitudes are measured alongside a specific climate-policy menu, any “success” in communication is automatically legible as movement toward policy compliance, not as an achievement of greater institutional accountability or public deliberation.

What drops out of frame:

- Process Accountability: Metrics for institutional health—error-correction speed, COI handling, retraction visibility, minority-report protocols—are absent from the framework as potential objects of change.

- Bidirectional Dialogue: The implied communication model is strictly one-way (institution → public). There is no mechanism for public critique to loop back and re-shape institutional behaviour.

- Substantive Input: Scepticism grounded in legitimate, contextual concerns like local costs, specific risk exposures or past service failures is flattened into a “messaging problem,” rather than treated as substantive input for better policy.

The rhetorical choreography sees the argument proceeding via a seamless sequence: Evidence → recommendations → practitioners → address scepticism. This chain cleanly converts political and epistemological disagreement into a managerial problem—a “to-be-treated” attitude—while positioning the institutional structures that might provoke such disagreement firmly outside the zone of intervention.

The ultimate measurement consequence is that the dataset does not serve a democratic dialogue; it functions as a targeting and optimisation map for compliance campaigns. It identifies who to reach, with what frames, to nudge them toward which policy endpoints. In this configuration, “science communication” becomes the technical instrument that preserves institutional continuity, while the concept of “reform” remains permanently offstage.

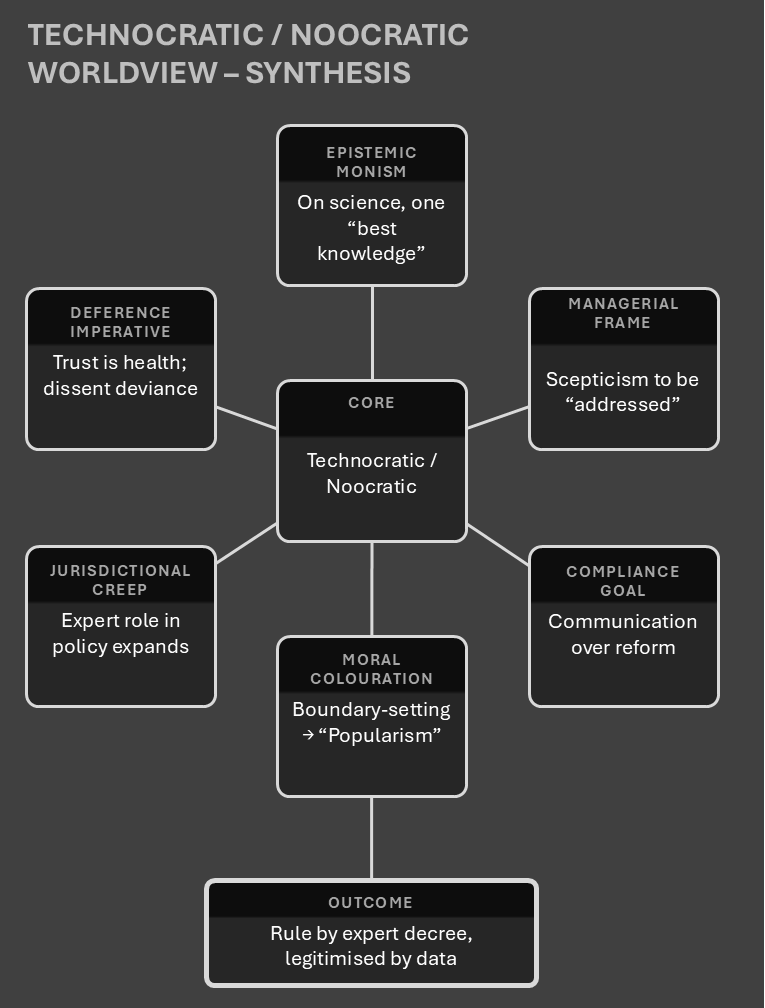

Underlying Political Project: The Technocratic Worldview

The TISP project operates from a coherent but unstated political philosophy—a technocratic worldview that systematically reengineers the relationship between science, society and democracy. This worldview becomes visible when we examine its operational components:

Epistemic Monism as Default Position

The foundational creed appears in the paper’s warning:

“Otherwise science will lose legitimacy and thus be limited in its capacity to provide the best available knowledge to society.”

This single sentence encodes the entire framework:

- A singular “Science” exists as a unitary voice delivering pre-validated “best available knowledge”, not as a contested field or battle of ideas, hypotheses and rebuttals

- Legitimacy is treated as a resource science must retain, not something institutions must continually earn

- The public’s role is reduced to reception, not participation or critique

- The crisis is framed as public reluctance, not institutional fallibility, corrupt practices or systemic blindness.

The Measurement Trap

The data descriptor genre provides the perfect vehicle for this worldview. By presenting itself as merely “measuring attitudes,” the project embeds its political assumptions as neutral categories:

- Trust becomes the key performance indicator for societal health

- “Science-related populism” provides the pathology label for dissent

- Together, they transmute political disputes about values and jurisdiction into psychological gradients of deference.

Jurisdictional Expansion by Stealth

The survey design performs a subtle but crucial jurisdictional expansion:

- Questions about scientists’ role in policy are fielded within a frame where boundary-setting is already listed among “challenges” to authority

- The empirical posture of “measuring attitudes” smuggles in the normative move of expanding expert jurisdiction

- Co-locating trust/populism measures with a specific climate policy menu creates an interpretive funnel where scepticism automatically registers as unreasonable resistance.

The Legitimacy Machinery

The project employs sophisticated legitimacy-building techniques:

- FAIR/preregistration/OSF protocols function as “legitimacy rails,” using “openness” as insulation against deeper validity questions

- The massive, globally distributed author list manufactures consent optics through authorship-for-fieldwork arrangements

- Prestige anchors (Harvard, elite institutions) transfer brand capital to the constructs, making “who signs” a substitute for rigorous falsification.

Threat Manufacturing and Moral Colouring

The framework systematically colours dissent with negative moral valence:

- Boundary defence, cost objections and institutional critiques are narratively positioned alongside “misinformation” and “populism”

- This creates a moral gradient where democratic oversight acquires the coloration of epistemic vice

- Value conflicts become reinterpreted as attitude defects to be corrected.

The Governance Outcome

The ultimate result is technocracy (rule by experts) drifting toward noocracy, or knowledge-based rule:

- Expertise becomes normatively primed to steer policy, not merely advise it

- Citizens appear as population segments to be adjusted through communication campaigns

- “Openness” and “communication” become tools of continuity management rather than levers for institutional reform

- The political field is narrowed ex ante by measuring how much the public should defer, not whether institutions have earned it

- Democratic governance and participation are replaced by stakeholder consultations and surveys.

This worldview represents a fundamental inversion of democratic accountability: rather than institutions earning legitimacy through demonstrable integrity, the public bears the burden of demonstrating adequate deference. The measurement instrument becomes the vehicle for enforcing this inversion while maintaining the appearance of neutral social science.

Conclusion — Keep the Data, Break the Seal

TISP arrives dressed in the garb of neutrality—global samples, preregistration, FAIR/OSF protocols, a public dashboard. But its architecture is fundamentally political. The instrument centres trust and pathologises dissent as “science-related populism,” co-positions these metrics with a narrow climate-policy menu and scales this frame globally through a prestige consortium. In an era of systemic legitimacy crisis, this configuration actively performs a technocratic worldview: authority flows downward, dissent is recoded as an attitude defect and “openness” functions as insulation against audit rather than a means to enable it.

Rejecting this framework does not require throwing away the dataset. It requires naming the instrument’s hidden functions:

- The descriptor doesn’t merely describe public opinion; it decides what counts as legitimate opinion.

- The author list doesn’t merely credit contributors; it manufactures an optical illusion of consensus.

- The dashboard doesn’t merely share findings; it standardises and exports a specific political lens.

Read in this light, TISP’s political project comes into sharp focus: a governance system that meticulously measures public deference and routes it toward policy compliance, while systematically leaving the performance and integrity of institutions off the balance sheet.

The minimal test for intellectual honesty in such an endeavour is simple: treat legitimacy as something institutions must earn through demonstrable integrity, not as a debt that publics owe.

The path forward is clear. Keep the data. Break the seal.

FAQ:

- Q: Are you denying climate risk?

A: No. The reality, or otherwise, of climate change is not the point. The article shows how a survey architecture turns trust into a compliance proxy and leaves institutional audit offstage.

- Q: Why harp on Harvard?

A: Because brand capital is used as evidence; the point is to map how that functions.

- Q: What would fix this?

A: Invariance tests, weights and back-casts, plus an institutional performance battery published alongside the trust metrics.

Further reading:

- TISP — Trust in Science & Science-Related Populism (official site). Project hub, overview, people, and publications. tisp-manylabs.com

- CONSPIRACY_FX (Univ. of Kent). Karen Douglas’s ERC project on the effects of conspiracy beliefs across politics, vaccination, and climate. Research at Kent

- Technocracy News & Trends (Patrick Wood). Long-running critical archive on technocracy, scientism, and governance-by-expert systems. technocracy.news

- “What is Epistocracy?” (open-access chapter, Brill). A clear academic entry point to knowledge-based rule (epistocracy/noocracy) and its fault lines. brill.com

- Nick Land — The Dark Enlightenment. The foundational (and contentious) essay for NRx/“dark enlightenment” debates. Read critically.

Published via Journeys by the Styx.

Mindwars: Exposing the engineers of thought and consent.

—

Author’s Note

Produced using the Geopolitika analysis system—an integrated framework for structural interrogation, elite systems mapping, and narrative deconstruction. Assistance from Deepseek for composition and editing.