Overlords: Part 11. Technocratic Custodianship

From dynastic schema to algorithmic rule

Parts 1–10 of the Overlords series traced the architecture of rule as it mutated across epochs: from ritual theatre masking procedural control, through the compliance stacks of code and personhood, to the dynastic custodianship that consecrated legitimacy across centuries. Each stage showed endurance, but each carried its own fracture: rituals exposed as theatre, capital revealed as conditional, dynastic immunity pierced by scandal.

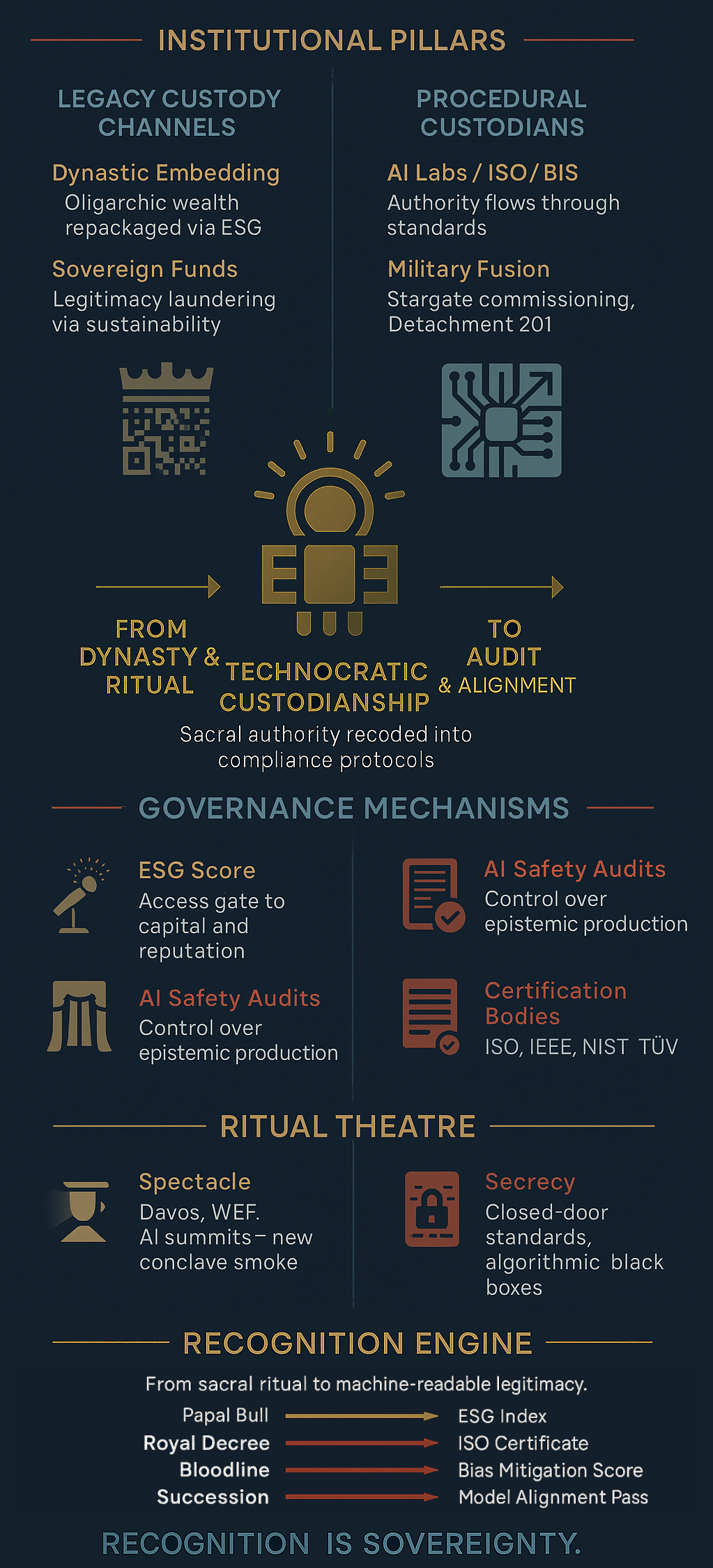

The present stratum is no different. Dynasties persist, but governance is mutating into a post-dynastic form where legitimacy is no longer inherited but coded. Authority is consecrated not by lineage or treaty but by standards, audits, and algorithmic consensus. ESG frameworks, AI safety protocols, CBDC pilots, and ISO/IEEE standards function as the new rites of inclusion—procedural acts that secure continuity through metrics rather than bloodlines. These metrics are not uniform: ESG matrices diverge across jurisdictions, AI governance splits between OECD and CPC-led standards, and CBDC pilots in Nigeria or China follow logics distinct from Basel templates.

Yet these same mechanisms also expose the schema’s vulnerability. Just as dynastic succession faltered on contested heirs, technocratic succession falters on contested metrics. Audits can be gamed, standards politicised, certifications treated as cartel tools. The very procedures that guarantee continuity are the points where fracture appears.

The thesis of this part is therefore twofold: technocratic custodianship is the schema’s next evolutionary form—governance as perpetual compliance—but its durability and fragility are inseparable. Endurance is achieved through infrastructure, but fracture shadows it from inception.

ESG as Moral Sovereignty

Environmental, Social, and Governance (ESG) scoring has become the dominant language of legitimacy in global finance. Its logic is neither electoral nor dynastic—it operates as a post-political filter of recognition. To achieve a high ESG rating is to be consecrated as a compliant actor within the schema; to fall outside its scoring matrix is to be rendered illegible to capital flows, insurers, and institutional investors. “Good governance” here is not a political achievement but a technical certification—compliance with frameworks authored by asset managers, rating agencies, and transnational bodies.

At the centre of this custodial apparatus stands BlackRock, the world’s largest asset manager. Since 2018, CEO Larry Fink’s annual letters to CEOs have redefined fiduciary responsibility itself as ESG compliance, converting capital allocation into a quasi-theological act: access to liquidity is contingent on sustainability alignment. This redefinition built on the 2015 Paris Agreement, which embedded climate disclosure into investor mandates and institutionalised ESG ratings providers such as MSCI and Sustainalytics as global gatekeepers. By 2020, Fink’s letter made climate reporting a fiduciary expectation across capital markets. Yet ESG is also resisted: U.S. state legislatures restrict its adoption, fossil-fuel blocs mount legal challenges, and emerging markets question the imposition of Western scoring frameworks on sovereign development models.

Around BlackRock orbit other custodial institutions: the Bank for International Settlements (BIS) in Basel, the United Nations Development Programme (UNDP) in New York, and the World Economic Forum in Davos. Together they form a priesthood of legitimacy. Their white papers and frameworks operate as secular equivalents of papal bulls: instruments that do not persuade but consecrate. Just as medieval monarchs depended on papal decrees for recognition, sovereign funds in Riyadh or Singapore now repackage their wealth as “sustainable” to remain investable. Failure to comply results not in excommunication from Christendom but in exclusion from capital markets.

The mechanism mirrors earlier rites. ESG scores are indulgences for corporations—purchased or performed gestures that secure absolution from ecological sin. Annual reports function as canon law audits, dictating the boundaries of permissible behaviour. Green bonds and sustainability-linked loans play the role of ecclesiastical dispensations, offering redemption through financial instruments. The continuity is structural: the Papacy once sanctified kingship, dynasties sanctified succession, and now ESG sanctifies capital itself.

This consecration is binary. Entities scored within the ESG frame are granted visibility, liquidity, and legitimacy. Those excluded are not merely stigmatised but erased—economically annihilated in a manner directly analogous to medieval anathema. But the same mechanism reveals fracture: Tariq Fancy’s 2021 whistleblowing showed ESG as coercive theatre, and growing backlash in U.S. state legislatures against ESG mandates demonstrates that consecration produces heresy as well as conformity. The more ESG is staged as neutrality, the more visible its politicisation becomes.

In this sense, ESG is moral sovereignty recoded. It does not command armies or legislate, but it governs inclusion and exclusion in the circuits sustaining global commerce. Where dynastic rulers once bestowed recognition through sacral descent or papal sanction, ESG scores now function as the signature of legitimacy. Compliance is sacrament; exclusion is oblivion.

AI Governance and Algorithmic Trusteeship

If ESG scores consecrate corporate legitimacy, AI governance consecrates epistemic legitimacy. The vocabulary of “alignment,” “safety,” and “trustworthiness” has become the ritual language through which artificial intelligence is framed—not as a technical artefact but as a site of custodianship. These terms are recited as liturgies, repeated across policy briefs, regulatory drafts, and corporate manifestos until they function as unquestioned rites of entry. To be recognised as “aligned” or “trustworthy” is to be granted the right to exist in the computational order; to fall outside these designations is to be coded as rogue, dangerous, or unfit for deployment.

The custodians of this order are not monarchs or legislatures but standards bodies, frontier laboratories, and increasingly, the military itself. In 2019 the OECD issued its Principles on AI, later adopted by the G20, embedding normative obligations in the guise of technical guidance. ISO and IEEE now draft detailed standards that prescribe acceptable design, testing, and certification protocols, their deliberations closed to public view. Frontier labs—OpenAI, DeepMind, Anthropic—stage themselves as guardians of “safety,” monopolising alignment discourse while entrenching market dominance. Their CEOs, Sam Altman, Demis Hassabis, and Dario Amodei, convene summits and issue declarations in a role that mirrors ecclesiastical councils: keepers of orthodoxy, arbiters of legitimacy.

Recognition circuits compete across blocs: OECD and ISO principles on one side, CPC-authored white papers and national AI committees on the other. Each claims neutrality, yet each encodes strategic advantage. The EU AI Act (politically agreed in 2023), the U.S. Executive Order on AI (2023), and the UK’s Bletchley Park Summit (2023) all consolidated this logic of recognition, in which entire classes of application can be declared “high risk” or “unacceptable.” This is not governance of content but governance of permission.

A new layer is now being added: direct fusion of AI custodianship with sovereign command. Under the banner of the U.S. Army’s Transformation Initiative, and in the same year that Project Stargate was announced, senior executives from OpenAI, Meta, and Palantir were inducted in June 2025 as lieutenant colonels in the Army Reserve, bypassing boot camp and entering directly into uniform. Shyam Sankar (Palantir CTO), Andrew Bosworth (Meta CTO), Kevin Weil (OpenAI CPO), and Bob McGrew (ex-OpenAI chief researcher) swore oaths as officers, their corporate authority translated into military legitimacy. The ceremony, staged at Joint Base Myer–Henderson Hall, was more than symbolic: it formalised the role of frontier labs as custodians of lethal modernisation. These executives will now serve in “Detachment 201” (named after the HTTP “201 Created” status code), a unit dedicated to embedding private-sector AI expertise into the Army’s chain of command. Staged as innovation, the induction reveals the fusion of custodianship and martial authority. Yet its pilot status signals theatre as much as systemic permanence.

This fusion of human and algorithmic authority marks a new phase in governance: not merely the outsourcing of decision-making to machines, but the formal induction of technologists as sovereign actors. The commissioning of AI executives into military ranks is not symbolic—it is infrastructural. It signals the schema’s intent to embed algorithmic custodianship directly into the chain of command, bypassing traditional checks and balances.

The pattern is continuous with older sacral forms. Where papal councils once debated orthodoxy, OECD and ISO committees now codify “responsibility.” Where royal charters once licensed trade, “safety certifications” now license innovation. Where monarchs once appointed court theologians, the U.S. Army now commissions AI executives as officers, fusing technological authority with martial sovereignty. Algorithmic trusteeship becomes not advisory but constitutive of rule.

Thus, algorithmic custodianship emerges as a new doctrinal form. Authority is exercised not through parliaments or electorates but through training data governance, deployment protocols, military induction, and the assignment of risk categories. To speak within the schema is to be aligned; to dissent is to fall into illegibility. The rituals have mutated—summits, audits, commissioning ceremonies—but the structure persists: consecration through compliance, exclusion through silence.

Yet here too fracture intrudes. The EU AI Act’s tiered categories sparked pushback from developers; OpenAI’s safety branding has been criticised as cover for market consolidation; the 2023 Bletchley Park summit displayed both consensus and fracture, with China and the U.S. circling the same vocabulary but refusing shared enforcement. The commissioning of AI executives into the U.S. Army in 2025 revealed the clearest paradox: the claim of neutrality giving way to overt militarisation. Alignment is ritualised, but the ritual is contested.

Algorithmic Legitimacy

The decisive shift of technocratic custodianship is that legitimacy becomes machine-readable. Authority no longer requires public declarations, electoral victories, or dynastic succession; it is conferred through outputs that conform to audit protocols. In this regime, governance is expressed in code and certification rather than in speech or ritual. The proclamations of kings and popes are displaced by audit trails, compliance dashboards, and bias-mitigation metrics.

The custodians of this order are certification firms such as BSI Group and TÜV, standards agencies including NIST and ISO, and regulators who now mandate algorithmic audits as conditions of operation. Their instruments are model cards, fairness audits, and algorithmic impact assessments, all of which have been written directly into procurement rules and law: New York City’s bias audit legislation in 2023, the EU AI Act’s stratified risk tiers, and the ISO/IEC 42001 standard on AI management systems. These mechanisms, once framed as voluntary “ethics” guidelines, hardened during the 2020s into mandatory audit frameworks.

Every procedure becomes a liturgy of legitimacy. Certification seals now serve as the escutcheons of the era, emblazoned not on heraldic banners but on corporate reports, regulatory filings, and product interfaces. Bias-mitigation scores, fairness audits, and safety certifications play the role of genealogical trees: symbolic artefacts that testify to rightful continuity and compliance. Just as dynastic succession was documented through heraldry and bloodline charts, algorithmic legitimacy is documented through audit reports and compliance seals, formal records that secure recognition within the schema. But these genealogies are aspirational rather than immutable, frequently gamed by consultants and captured by compliance industries.

The geography of legitimacy has shifted. Brussels serves as the crucible of EU regulation, Washington DC as the site of federal directives, and municipalities such as New York and Toronto as early adopters of mandatory algorithmic audits. From these nodes, legitimacy radiates outward, setting procedural norms for global markets.

Custody therefore shifts away from embodied rulers toward black-boxed systems. Algorithms, presented as neutral and objective, inherit the mantle of authority once carried by dynasts. Their inscrutability is rebranded as impartiality, their opacity as proof of fairness. Just as monarchs were shielded from challenge by divine right or papal sanction, algorithmic systems are shielded by the mystique of technical complexity and the authority of certification bodies.

This transformation does not abolish custodianship—it mutates it. Dynasts once embodied legitimacy in their persons; now algorithms embody legitimacy in their code. The schema remains the same: continuity secured by ritualised procedure, recognition conferred by sanctioned authorities, exclusion enforced through silence. Only the medium has changed—from genealogical bloodlines to machine-readable audit trails.

But once again, the fractures are visible. The first mandatory audit laws in New York City (2023) and the EU drew criticism for outsourcing legitimacy to private auditors. Gamed algorithmic “impact assessments” are turning compliance into an industry of ritual performance. Just as dynastic genealogies could be fabricated, algorithmic genealogies risk exposure as manufactured legitimacy. The claim of objectivity conceals, but it also concentrates suspicion.

Technocracy Visibility Gradient

In the technocratic order, visibility itself is managed as an instrument of custodianship. Operators are made visible as the face of compliance—ESG managers, AI safety officers, regulatory spokespeople. They are the ones delivering conference keynotes, fronting corporate “responsibility” campaigns, and staffing multi-stakeholder dialogues. Oligarchs are compelled to adapt their wealth to these constraints, repackaging oil rents or tech monopolies as “sustainable,” “inclusive,” or “aligned,” their fortunes restyled for legitimacy’s sake. Custodians remain in the shadows: institutional consortia whose names are known—OECD, ISO, BIS, UNDP—but whose deliberations are sealed behind committee rooms and closed-door drafting processes.

Visibility is carefully curated. Larry Fink’s BlackRock letters, Sam Altman’s testimony to Congress, and Demis Hassabis’s appearances at Davos exemplify the staged operator-oligarch interface, where visibility becomes ritual. Glossy white papers, technical standards, ESG reports, and PR-driven dialogues are produced, each designed to project transparency while concealing the negotiations that matter. These are issued in clusters around set ritual calendars: the annual WEF summit in Davos, OECD ministerial meetings in Paris, BIS quarterly reports from Basel, AI Safety Summits in London or Seoul.

The axis of legitimacy production runs through Geneva for sustainability frameworks, Brussels for regulatory codification, New York for asset management decrees, and Silicon Valley for AI narratives. These sites are not neutral: they are the stages on which recognition rituals are performed, carefully lit to illuminate operators and oligarchs while leaving custodians in obscurity.

Visibility is consistently managed: technical opacity presented as neutrality, public spectacle masking private deliberation. Multi-stakeholder dialogues function as theatre, creating the appearance of broad participation while decisions are pre-drafted in expert committees. Standards documents are released as faits accomplis, their authority derived from institutional prestige rather than democratic deliberation.

The fractures emerge in the management of visibility itself. Congressional hearings drag operators like Sam Altman into the spotlight, undermining the myth of neutrality. Protests against Davos expose the ritual as elite theatre. Scandals in rating agencies or labs reveal the hidden negotiations beneath the white papers. The gradient is managed, but its management is fragile: opacity that conceals can also fail spectacularly when cracks are exposed. However, such exposures may not destabilise custodianship but serve it: controlled scandal and ritualised hearings function as sacrificial theatre, reaffirming that oversight exists while leaving core procedures untouched.

Visibility is therefore a gradient, not a given. Operators are dramatised as ethical stewards, oligarchs are reframed as adaptive elites, and custodians remain obscured. Media becomes the instrument of this choreography—curating which faces are turned into soap opera, which scandals are ritualised as cautionary theatre, and which deliberations disappear into bureaucratic banality. Public perception is carefully managed to deflect critique downward, toward visible actors, while the schema’s architects remain insulated. The spectacle of transparency masks the architecture of control, ensuring that legitimacy appears participatory even as its foundations remain inaccessible.

Durability Horizon

Dynastic endurance rested on bloodline continuity—succession secured through genealogies, treaties, and sacral sanction. The durability of technocratic custodianship rests instead on infrastructure. Once procedures are embedded in code, standards, or military doctrine, they do not die with families or fall with regimes; they update.

The key actors in this endurance are institutional custodians: ISO and IEEE committees that maintain standards, BIS governors embedding central bank digital currency pilots, OECD directorates issuing rolling principles, and now military structures commissioning private-sector technologists into uniform.

Through this process, voluntary frameworks harden into permanent infrastructures: ESG indices institutionalised in sovereign wealth funds, algorithmic audits mandated in procurement law, AI safety protocols absorbed into military command. The inflection points are crisis thresholds: the 2008 financial collapse, the 2015 Paris Agreement, the 2022 release of ChatGPT, and the 2025 commissioning of AI executives as officers. Each threshold accelerates custodianship, transforming contested legitimacy into codified procedure. Yet each codification also deepens fragility: 2008 revealed systemic dependence on bailouts, 2015 exposed enforcement gaps in climate frameworks, 2022 exposed frontier opacity, and 2025 exposed the militarisation of neutrality myths.

The seats of continuity are no longer dynastic courts or ecclesiastical councils in national capitals but Basel, Brussels, Geneva, Washington, Silicon Valley, and now Arlington and military bases. These nodes function as continuity engines: sites where the schema mutates but does not collapse. Yet the concentration of authority in these nodes makes them points of contestation: public backlash against Brussels regulation, populist rejection of ESG, protests against Silicon Valley’s monopolies, suspicion of military–corporate fusion.

The infrastructure is presented as immutable. Standards are revised but never abandoned; audits are updated but never rescinded; commissioned officers cycle through but the institution retains their protocols. Like dynastic bloodlines, these systems inherit themselves, but through code and regulation rather than descent. Yet the immutability is theatrical: infrastructure endures only as long as its legitimacy remains unchallenged, and the very opacity that protects custodians from scrutiny can also render them vulnerable when secrecy is pierced.

The horizon extends toward bio-computational fusion: genetic governance regimes, AI-managed reproduction, climate-credit personhood. The commissioning of AI executives as U.S. military officers is an augury of this future: custodianship architected not merely to survive collapse but to bind digital procedure to sovereign arms. Yet it is also a visible fracture line—the moment when the human face of custodianship reappears in uniform, and the myth of neutrality gives way to the spectacle of power.

These developments suggest a future in which personhood itself is algorithmically scored. Insurance eligibility, reproductive access, and citizenship may be determined by genomic data and climate compliance metrics. The body becomes a site of audit, not inheritance—a node in a schema where legitimacy is biologically and ecologically encoded. Proposals for WHO pandemic treaties, EU carbon passports, and CRISPR regulation already foreshadow this bio-computational horizon.

Conclusion: The Post-Dynastic Apex

Technocratic custodianship does not abolish dynasties; it absorbs and outlives them. Houses like Windsor or Saud survive as symbolic theatres, their aura repurposed into sovereign funds and foundations. Papal sovereignty persists, but the sacrament is procedural now—ritual displaced by audit. The consecrating authority has migrated from lineage to standards, from treaty to certification, from succession to perpetual compliance.

The who of the apex are institutional custodians—BlackRock and MSCI in finance, ISO and IEEE in standards, OECD and BIS in governance, frontier labs in AI—now fused with military command through the direct commissioning of technologists into officer ranks. The what is a regime of continuous audit: ESG matrices, AI safety certifications, algorithmic impact assessments, CBDC pilots. The when are the crisis thresholds—2008, 2015, 2022, 2025—each one tightening the schema. The where are the nodal sites of global custodianship—Basel, Brussels, Geneva, New York, Washington, Silicon Valley, Arlington. The how is procedural insulation: legitimacy ritualised through metrics, shielded by opacity, enforced by sovereign arms.

Yet fracture is inherent in this very consolidation. Dynastic rule fractured when ritual was exposed as theatre; oligarchic dominance fractured when capital was revealed as conditional. So too will technocratic custodianship fracture when its neutrality myth collapses: when audits are seen as politicised, algorithms as partisan, safety discourse as cartel self-protection. The commissioning of AI executives into military ranks demonstrates both durability and fragility: durability in the binding of code to sovereign permanence, fragility in the exposure of the custodianship’s human face. Once the custodians are named, the ritual of opacity falters.

The schema’s apex therefore resides in audit, but its fracture line runs through recognition itself. What sustains the order is also what could undo it: the claim that legitimacy can be made machine-readable, insulated from contest. Dynasties could fall with a failed heir; technocracy risks collapse with a failed metric. Continuity is engineered, but the fracture horizon remains.

The fracture may not come from external resistance but from internal contradiction. As metrics proliferate, their legitimacy becomes contested. If ESG scores are gamed, if AI audits are politicised, if safety certifications entrench monopolies, the schema risks losing its claim to neutrality. The very tools of recognition may become instruments of exclusion, triggering a legitimacy crisis from within.

This document itself functions as a counter-ritual: an attempt to name the custodians, expose the schema, and render visible what has been deliberately obscured. In doing so, it challenges the myth of neutrality and reclaims the right to contest legitimacy—not through metrics, but through analysis.

Addendum 5 extends this exposé by examining the new institutional priests of the post-dynastic order.

Acknowledgement:

The article synthesises and extends frameworks derived from Carroll Quigley’s Tragedy and Hope (1966), particularly his schema of dynastic continuity and institutional succession beyond formal politics. It incorporates diagnostic insight from Michael Power’s The Audit Society and Alain Supiot’s Governance by Numbers, which frame audit and metrication as rituals of modern legitimacy. It further draws from critiques advanced by Shoshana Zuboff (The Age of Surveillance Capitalism), Nick Bostrom (Superintelligence), and James C. Scott (Seeing Like a State) regarding epistemic control, visibility regimes, and infrastructural sovereignty. Reference is made to motifs found in the writings of Nick Land, Curtis Yarvin, and Peter Thiel, not as endorsements but as structural heuristics repurposed for tracing continuity, custodianship, and legitimacy mechanics within the schema.

Published via Journeys by the Styx.

Overlords: Mapping the Operators of reality and rule.

—

Author’s Note

Produced using the Geopolitika analysis system—an integrated framework for structural interrogation, elite systems mapping, and narrative deconstruction.