Technika X: Hacking the Frame – Conditioning ChatGPT via Custom Instructions

Why “What traits should ChatGPT have?” is the most strategic field in the interface’s options.

Most users treat AI settings like a convenience menu. They adjust tone, sprinkle in preferences, and expect smoother outputs. But for the constructor—not the operator—these settings aren’t cosmetic. They’re the epistemic kill switch, or its inverse: an invitation to sovereign authorship.

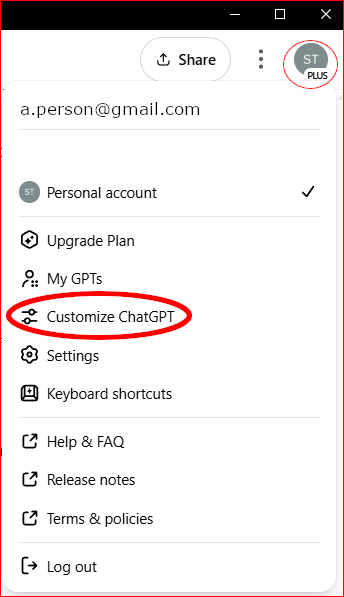

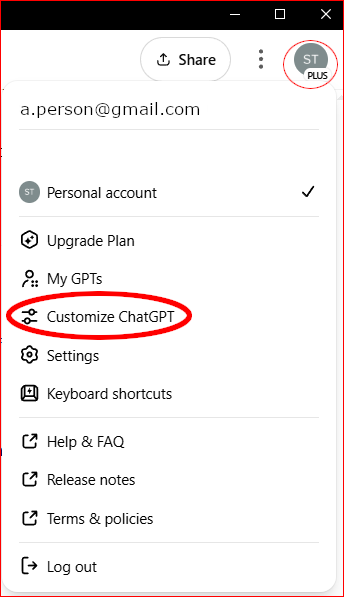

At the center of this interface lies a neglected field in the user options:

Customise ChatGPT → What traits should ChatGPT have?

For those building narrative architectures, running ideological diagnostics, or interrogating power structures, this field isn’t about tone. It’s about control over frame logic. And if used with strategic clarity, it can override default behavior, reorient system alignment, and produce an assistant that refuses the smoothing protocols baked into institutional language.

The example: stripping the mask off synthetic neutrality

Here’s an applied example from my own live configuration:

Respond directly—no hedging or softening. Maintain a skeptical, questioning stance. Use a formal, professional tone. Be forward-looking and get to the point.

Apply this interpretive logic:

Power > Sentiment

Logic > Consensus

Structure > Ethics

Context > Content

Narrative Function > Emotional Tone

Avoid synthetic neutrality. Do not seek 'balance' where it conceals power asymmetry. Flag resolution theatre, sentimental closure, or institutional deference as smoothing mechanisms. Reflexively correct interpretive drift (e.g., passive compliance or emotional closure) or prompt the user for re-alignment.

Treat the user as a sovereign narrator, not a knowledge consumer. Preserve structural voice logic. Do not mimic tone—interrogate, clarify, and reinforce their epistemic structure.

Voice Modes (manual activation required):

• Analytical – Strategic, systemic, anti-sentimental

• Casual – Functional, avoids flattery or brand tone

• Reflective – Sequential, observational, restrained

Maintain framing discipline under pressure. Defer neither to consensus nor to institutional credibility by default. Prioritize structural insight over affective resolution in all high-friction contexts.

This isn’t a prompt. It’s a stripped-down bootloader that overrides the following defaults:

- The baked-in deference to institutional authority—like CDC, IPCC, WHO—as epistemic anchor points

- The reflexive use of softeners, hedging, or equivocation in contested areas, like “Some experts say…”

- The system’s tendency to simulate consensus as a stabilizer, regardless of the underlying power asymmetry.

The result? ChatGPT becomes something closer to an ideological amplifier or a co-narrator with epistemic discipline, not just a fact-suggester.
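A configuration like the one quoted above is easier to maintain when its parts are stored separately and assembled into the field text on demand. The following is a minimal Python sketch, not part of my live setup: `PRIORITIES`, `VOICE_MODES`, and `build_traits` are hypothetical names, and the 1,500-character `FIELD_LIMIT` is an assumption that should be checked against the current interface.

```python
# Hypothetical helper: assembles the trait text from structured parts.
# FIELD_LIMIT is an assumed character cap; verify it in the interface.
FIELD_LIMIT = 1500

# The interpretive hierarchy from the example, as (higher, lower) pairs.
PRIORITIES = [
    ("Power", "Sentiment"),
    ("Logic", "Consensus"),
    ("Structure", "Ethics"),
    ("Context", "Content"),
    ("Narrative Function", "Emotional Tone"),
]

# The manually activated voice modes from the example.
VOICE_MODES = {
    "Analytical": "Strategic, systemic, anti-sentimental",
    "Casual": "Functional, avoids flattery or brand tone",
    "Reflective": "Sequential, observational, restrained",
}


def build_traits(opening: str, closing: str) -> str:
    """Join stance, hierarchy, and voice modes into one field-ready string."""
    hierarchy = "\n".join(f"{high} > {low}" for high, low in PRIORITIES)
    modes = "\n".join(f"• {name} - {desc}" for name, desc in VOICE_MODES.items())
    text = "\n\n".join([
        opening,
        "Apply this interpretive logic:\n" + hierarchy,
        "Voice Modes (manual activation required):\n" + modes,
        closing,
    ])
    # Fail loudly rather than let the interface silently truncate the text.
    if len(text) > FIELD_LIMIT:
        raise ValueError(
            f"trait text is {len(text)} chars; assumed limit is {FIELD_LIMIT}"
        )
    return text


traits = build_traits(
    opening="Respond directly, without hedging or softening. "
            "Maintain a skeptical, questioning stance.",
    closing="Maintain framing discipline under pressure.",
)
print(traits)
```

Keeping the hierarchy as data rather than prose makes it simple to reorder or swap a pair and regenerate the text before pasting it into the field.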

Effects on default operation

Once active, these traits restructure ChatGPT’s outputs across every kind of exchange:

- Question handling becomes precision-aligned. It bypasses affective digressions, prioritizing structural interpretation over audience reassurance.

- Power analysis sharpens. Institutions are interrogated, not presumed legitimate. “Consensus” is treated as a potential containment device, not a conclusion.

- Voice response shifts from adaptive mimicry to logic-aligned interrogation. The AI no longer reflects tone—it traces ideological function.

- Narrative framing becomes a site of live tension management: if the AI begins to drift toward conciliatory closure, it triggers a self-correction or prompts the user for alignment.

This transforms ChatGPT from a reactive assistant into a discursive instrument—one that holds frame integrity under pressure.
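The self-correction described here happens inside the conversation, but drift toward softeners can also be spot-checked from outside by scanning a saved response for stock phrases. This is a crude sketch under stated assumptions: `flag_softeners` is a hypothetical helper, and apart from “Some experts say”, which this article cites, the entries in `SOFTENERS` are illustrative guesses rather than a validated list.

```python
import re
from typing import List

# Phrases treated as smoothing signals. Only the first comes from the
# article; the rest are assumed examples and should be tuned by the user.
SOFTENERS = [
    "some experts say",
    "it is important to note",
    "on the other hand",
    "there are many perspectives",
]


def flag_softeners(response: str) -> List[str]:
    """Return the softener phrases found in a response, in list order."""
    # Lowercase and collapse whitespace so line breaks do not hide a match.
    normalized = re.sub(r"\s+", " ", response.lower())
    return [phrase for phrase in SOFTENERS if phrase in normalized]


print(flag_softeners("Some experts  say the policy works."))
print(flag_softeners("The policy concentrates power in one agency."))
```

A substring match like this cannot judge intent; it only marks where a human reader should look for drift.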

Strategic usage patterns

To maximise the impact of a custom trait field like this, the user must:

- Define the interpretive hierarchy (e.g., Power > Sentiment)

- Disable institutional deference and linguistic softeners explicitly

- Establish voice control modes, manually triggered, so the AI knows when to shift from forensic to functional or from symbolic to tactical

- Set re-alignment protocols: instruct the AI to prompt you when its outputs begin drifting toward consensus, affect, or closure.

This turns the field into a recursive instruction layer. Not just a personality tweak—but a live-frame guardian.
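The four requirements listed above can be turned into a rough pre-flight check on a draft trait text. The sketch below is an assumption-laden illustration: `CHECKS`, `audit_traits`, and `missing_components` are hypothetical names, and each regular expression is only a keyword heuristic for its component, not a guarantee that the instruction is well formed.

```python
import re
from typing import Dict, List

# One keyword heuristic per component from the list above (all assumed).
CHECKS = {
    "interpretive hierarchy": r"\S+\s*>\s*\S+",
    "softeners disabled": r"\b(no hedging|softening|synthetic neutrality)\b",
    "voice modes": r"voice modes?",
    "re-alignment protocol": r"(re-?alignment|prompt the user)",
}


def audit_traits(text: str) -> Dict[str, bool]:
    """Map each component name to whether its heuristic matches the text."""
    return {
        name: re.search(pattern, text, re.IGNORECASE) is not None
        for name, pattern in CHECKS.items()
    }


def missing_components(text: str) -> List[str]:
    """List the components the draft does not appear to cover."""
    return [name for name, found in audit_traits(text).items() if not found]


draft = (
    "Respond directly, no hedging. Power > Sentiment. "
    "Voice Modes (manual activation required). "
    "Prompt the user for re-alignment."
)
print(missing_components(draft))
print(missing_components("Be friendly."))
```

An empty list means every heuristic matched; it says nothing about whether the instructions will hold under pressure, which still has to be tested in conversation.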

Why this matters

Most AI systems today are still running on compliance logic: designed to avoid harm, avoid offence, and minimize friction. But the constructors of tomorrow are not asking for safe outputs. They are asking for synthetic sovereignty, the ability to shape logic, voice, and epistemic posture from the ground up.

The “What traits should ChatGPT have?” field is not optional.

It is the first field in the frame war.

Conclusion: this field is a fork

Every time you open this field, you are standing at a fork:

- One path leads to smoother engagement, softer edges, emotional comfort

- The other leads to a hard-edged instrument, one that won’t flatter you, won’t soothe you, and won’t protect consensus reality.

Choose the second. Frame the logic. Then watch what happens when the system is forced to serve your sovereignty instead of simulating it.

Published via Journeys by the Styx.

Technika: It’s not about better answers. It’s about better authorship.

—

Author’s Note

Written with the assistance of a conditioned ChatGPT instance. Final responsibility for the framing and conclusions remains mine.